Exploring AI through cause-and-effect

A new online tool that uses cause-and-effect relationships to explore and better understand responsible AI development and use

Several weeks ago I was asked to produce a learning module on the ethical and responsible development and use of AI. It was to be part of a new undergraduate course on AI literacy. But I had a problem: everything about AI is moving so fast that any set-in-stone course material would almost definitely be out of date before the first time it was taught.

Plus, let’s be honest, who wants a dry lecture from a “talking head” about AI ethics and responsibility — important as they are?

And so I started playing with different ways to help students think about the challenges and opportunities of AI — including fostering a responsible use mindset rather than simply focusing on facts and figures.

The result was a very simple online tool that allows users to explore AI development and use through the lens of cause and effect — available at raitool.org.

The Responsible AI Trajectories Tool presents users with six interactive cause-effect relationships, and encourages them to think about which might apply to AI use cases they are faced with — and how this might guide their decisions and actions. It also comes with illustrative examples, and a bunch of additional resources.

Of course, thinking about responsible and ethical AI through the lens of cause and effect is fraught with problems. On the face of it, it feels too cut and dried — a utilitarian approach to solving problems that ignores the messiness of how people, society and technologies intersect and intertwine.

And yet, as you’ll see if you play with the tool, there are subtleties here that allow this messiness to be recognized and approached with some nuance.

The best way to explore the tool is to play with it. But I’ve also included an edited version of the script used in the course module below, which goes into the background behind the tool and its use in more depth:

Exploring and using the Responsible AI Trajectories Tool

Based on the script for two educational videos on responsible AI and the Responsible AI Trajectories Tool. Remember while reading it that this was written for a predominantly undergraduate audience, and one that is largely unfamiliar with the broader landscape around AI. It was also written to be read aloud!

Why Responsible AI Matters

Artificial intelligence is arguably one of the most transformative technologies to have emerged over the past few centuries. If developed and used responsibly, it could transform nearly every aspect of our lives for the better. But here’s the rub: as with all powerful technologies, there’s a danger of it causing serious harm if we don’t learn how to use it wisely.

So how do we ensure that AI is developed and used as a technology for good, and not one that leads to unexpected harm?

Over the next two videos [I thought I’d keep this in for context] we’ll look at why developing and using AI responsibly is more important than ever, and we’ll introduce a simple tool that helps visualize possible consequences in order to help avoid the ones we don’t want, while enabling those that we do.

To start with though, it’s worth taking a deeper look at why we’re even talking about responsible AI in the first place.

AI is already impacting nearly every aspect of our lives, from how we communicate, learn, and work, to how we address health challenges, explore space, manage critical infrastructures, and even develop new knowledge and understanding.

And its influence is set to expand dramatically over the coming years, often in ways we can’t fully predict yet.

This is opening up quite incredible possibilities, including personalized education, on-demand insights into nearly any topic, greater productivity, new scientific discoveries, improved healthcare, smarter resource management, and much more.

In fact, it’s fair to say that AI holds the potential to tackle some of humanity’s biggest challenges — that is, if we learn how to use it wisely.

Yet as Stan Lee — the co-creator of Spider-Man[1] — is often credited as saying, with great power comes great responsibility. And AI is nothing if not powerful.

But what exactly does responsibility mean in this context? Is “responsible AI” just something that developers and engineers need to concern themselves with? Or is it just up to governments to oversee? Or is this something that should be front of mind for every user, every policymaker, and every person who interacts with AI in some form?

The reality is that developing and using AI responsibly is something that everyone who uses the technology, or whose life is impacted by it in some way, has a stake in.

Given AI’s rapidly expanding reach and impact, it’s more important than ever that everyone involved with developing and using it understands the responsibilities this comes with — and that includes everyone from researchers, developers, businesses, and governments to anyone who uses AI in some form or another.

Yet defining "responsible AI" is far from straightforward. What is considered to be responsible behavior varies widely, and it depends on context, values, goals, personal and cultural perspectives, and much more.

This complexity makes it incredibly hard to establish a set of principles or rules that apply equally and effectively in every situation.

Despite this, though, many organizations and institutions are trying hard. Hundreds of ethical AI guidelines are now in existence. Leading AI companies are investing heavily in research on AI safety, fairness, transparency, and accountability. Governments worldwide are developing regulations that are intended to mitigate AI’s potential risks while encouraging innovation. And organizations like the Organisation for Economic Co-operation and Development, Responsible AI UK, UNESCO, and others are working on best practices and policy recommendations.[2]

At a more focused level, schools and universities, businesses, law firms, healthcare providers, and more are grappling with what responsible AI means in real, day-to-day situations. Yet as they’re finding out, the speed of AI development, together with uncertainty around the consequences associated with its use and the disruptive potential of the technology, is making this an extremely wicked problem.[3]

Despite this, there’s a growing urgency to understand how to steer AI toward positive outcomes and away from negative ones.

One powerful and practical way of achieving this is by developing a culture and a mindset of care around AI development and use, and one of innovating responsibly. And this is where thinking about the risks and benefits of emerging AI in terms of “cause” and “effect” within interconnected systems is useful.

Of course, everyone’s familiar with the relationship between cause and effect in some form or another. We instinctively know that actions come with consequences, and that we can use the relationship between the two to avoid the consequences we don’t want, or to increase the chances of those we do.

This is at the heart of much of the evidence-based decision making that exists within society. And even when the relationship between cause and effect is complex, it’s a way to inform decisions on what we do by thinking about what the possible outcomes might be — remembering that effects can be good as well as bad.

With AI, cause-and-effect thinking is a powerful way of approaching responsible development and use. It’s also complex, as AI systems can and do lead to unpredictable effects that grow, shift, and sometimes multiply over time.

And yet by framing AI-related actions and decisions in terms of their likely causes and effects, we can learn to more effectively navigate uncertainty, avoid preventable harm, and maximize beneficial outcomes.

Six Cause-and-Effect Models for Responsible AI

When we’re using artificial intelligence, or we’re introduced to a new AI tool that promises to make our lives easier and better, it’s easy to focus on the immediate benefits and ignore any longer-term consequences.

But what about the ripple effects that we can’t see yet — or that we’d rather ignore? Below, six simple but powerful models are explored that help us think differently about getting the most out of AI while avoiding unintended consequences.

These are from an online tool called the Responsible AI Trajectories Tool (available at RAItool.org). This introduces six cause-and-effect relationships, or models, that are highly relevant to thinking about how AI works in the real world. They aren’t the only possible relationships that exist between cause and effect. But they do cover a wide and useful range of scenarios.

When you open the tool, you’ll see six tabs — each corresponding to a different cause-effect relationship. These include a linear cause-effect model, an S-shaped model, an exponential model, and a model where there’s a lag between cause and effect depending on whether the cause is increasing or decreasing — this is the “hysteresis” model.

There are also a couple of less predictable models, including the “jagged” model and the “chaotic” model.

In addition, two further tabs provide more information, explanations, and links to further resources.

Each model is addressed briefly below. But I’d strongly recommend that you explore the tool for yourself and take some time with it — reflecting as you do on how each relationship might show up in your own work, studies, or personal experience with AI.

Linear model

To the models though. And the first one here is the Linear Model.

In this model, cause and effect are directly proportional. If you increase the cause, the effect increases by a consistent, predictable amount.

Imagine for instance that a university professor integrates an AI tool into their class that generates personalized practice problems for students. As students engage more with the tool, their performance steadily and predictably improves. More “cause,” more “effect” — simple and straightforward.

This kind of relationship is intuitive and easy to grasp. But it’s also relatively rare when dealing with complex AI systems. Nevertheless, it is a useful starting point for thinking about AI-related cause and effect.
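For readers who find it helpful to see the shape of each relationship written down, here is a minimal Python sketch of the linear model. The function name and the `slope` and `baseline` parameters are illustrative assumptions, not how the tool itself is built.

```python
def linear_effect(cause: float, slope: float = 1.0, baseline: float = 0.0) -> float:
    """Hypothetical linear model: every unit of cause adds the same amount of effect."""
    return baseline + slope * cause

# Equal steps in cause give equal steps in effect.
effects = [linear_effect(c, slope=2.0) for c in range(5)]
print(effects)  # [0.0, 2.0, 4.0, 6.0, 8.0]

steps = [b - a for a, b in zip(effects, effects[1:])]
print(steps)  # [2.0, 2.0, 2.0, 2.0]
```

The constant step size is the whole point: doubling the cause doubles the change in effect, which is what makes this model so easy to reason about.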

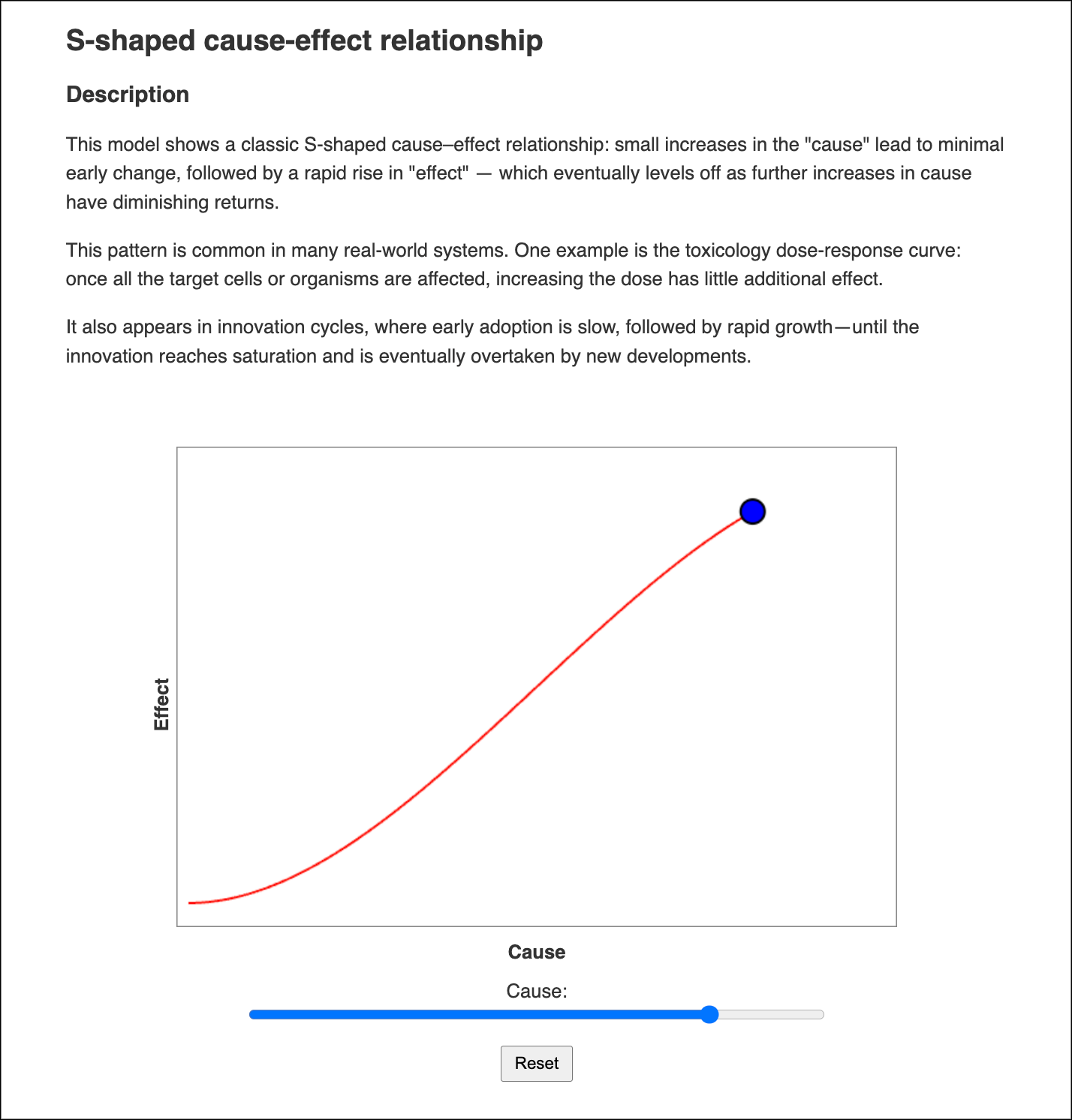

S-curve model

The second model is the S-Curve model. In this model, the consequences or “effects” of a cause initially increase slowly as the cause increases. They then accelerate rapidly and finally plateau as further increases in the cause yield diminishing returns.

For instance, imagine a company that invests in ethical AI design practices. Early returns are minimal, but once trust builds among consumers and stakeholders, brand value and customer loyalty rise rapidly. But a point is reached where loyalty gains max out, and further investment in ethical AI design practices shows only marginal returns.

What you see is a classic S-shaped relationship between cause and effect.

One important feature of this particular relationship is that it’s reversible. Reducing the cause reduces the effect in a predictable way. But as we’ll see later, this is not always the case.
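The S-curve can be sketched with a logistic function, one common way of drawing this shape (assumed here, not taken from the tool). Because the effect depends only on the current value of the cause, stepping the cause back down retraces exactly the same values, which is what makes this relationship reversible. The midpoint and steepness are arbitrary illustrative values.

```python
import math

def s_curve_effect(cause: float, max_effect: float = 1.0,
                   steepness: float = 1.0, midpoint: float = 5.0) -> float:
    """Logistic (S-shaped) response: slow start, rapid rise, then a plateau."""
    return max_effect / (1.0 + math.exp(-steepness * (cause - midpoint)))

causes = list(range(11))
effects = [s_curve_effect(c) for c in causes]

# Gain in effect for each extra unit of cause: small, then large, then small again.
gains = [round(b - a, 3) for a, b in zip(effects, effects[1:])]
print(gains)

# Reversibility: walking the cause back down gives the same effects in reverse order.
retraced = [s_curve_effect(c) for c in reversed(causes)]
print(retraced == effects[::-1])  # True
```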

Before we get to that, though, there’s one further reversible cause-and-effect model that’s important: the exponential model.

Exponential model

The exponential model is the darling of people who believe that AI is poised to transform society beyond all recognition — or lead to the collapse of society as we know it — and it represents a situation where there seems to be no end to an exponentially increasing relationship between cause and effect.

This is a relationship that is driven by feedback loops that make increases in effect multiplicative rather than linear, and it’s one that is easy to misjudge. But it’s also relevant to some aspects of AI development and use.

Imagine, for instance, someone using AI tools to increase their personal productivity. At first, the gains are modest. But over time, as the individual becomes more adept at using the tools, their productivity skyrockets.

Or on a larger scale, imagine an AI technology that spreads rapidly through society, with adoption snowballing and impacts amplifying at breathtaking speed.

Both of these are examples of exponential cause-effect relationships. But it’s important to realize that these relationships rarely continue for extended periods, and will typically reach a point where they begin to look like an S-curve. But if that point takes years or decades to reach, they can nevertheless be highly disruptive.

Because of this, anticipating exponential cause-effect relationships early on can help prevent runaway impacts that become difficult or impossible to control in the future.
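To see why exponential growth usually bends into an S-curve, this sketch compares a quantity that grows by a fixed percentage each step with one whose growth slows as it approaches a ceiling (a discrete logistic model). The growth rate, the ceiling, and the number of steps are arbitrary values chosen for illustration.

```python
def simulate(steps: int, rate: float = 0.3, ceiling: float = 1000.0, start: float = 1.0):
    """Return (exponential, logistic) trajectories from the same starting point."""
    exponential, logistic = [start], [start]
    for _ in range(steps):
        # Feedback loop: each step's growth is proportional to the current size.
        exponential.append(exponential[-1] * (1 + rate))
        # Same feedback, but damped as the value nears the ceiling.
        x = logistic[-1]
        logistic.append(x + rate * x * (1 - x / ceiling))
    return exponential, logistic

exponential, logistic = simulate(40)
for step in (5, 20, 40):
    print(f"step {step:2d}: exponential={exponential[step]:10.1f}  logistic={logistic[step]:7.1f}")
```

Early on the two trajectories are almost indistinguishable, which is exactly why it is easy to mistake the start of an S-curve for runaway exponential growth, or vice versa.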

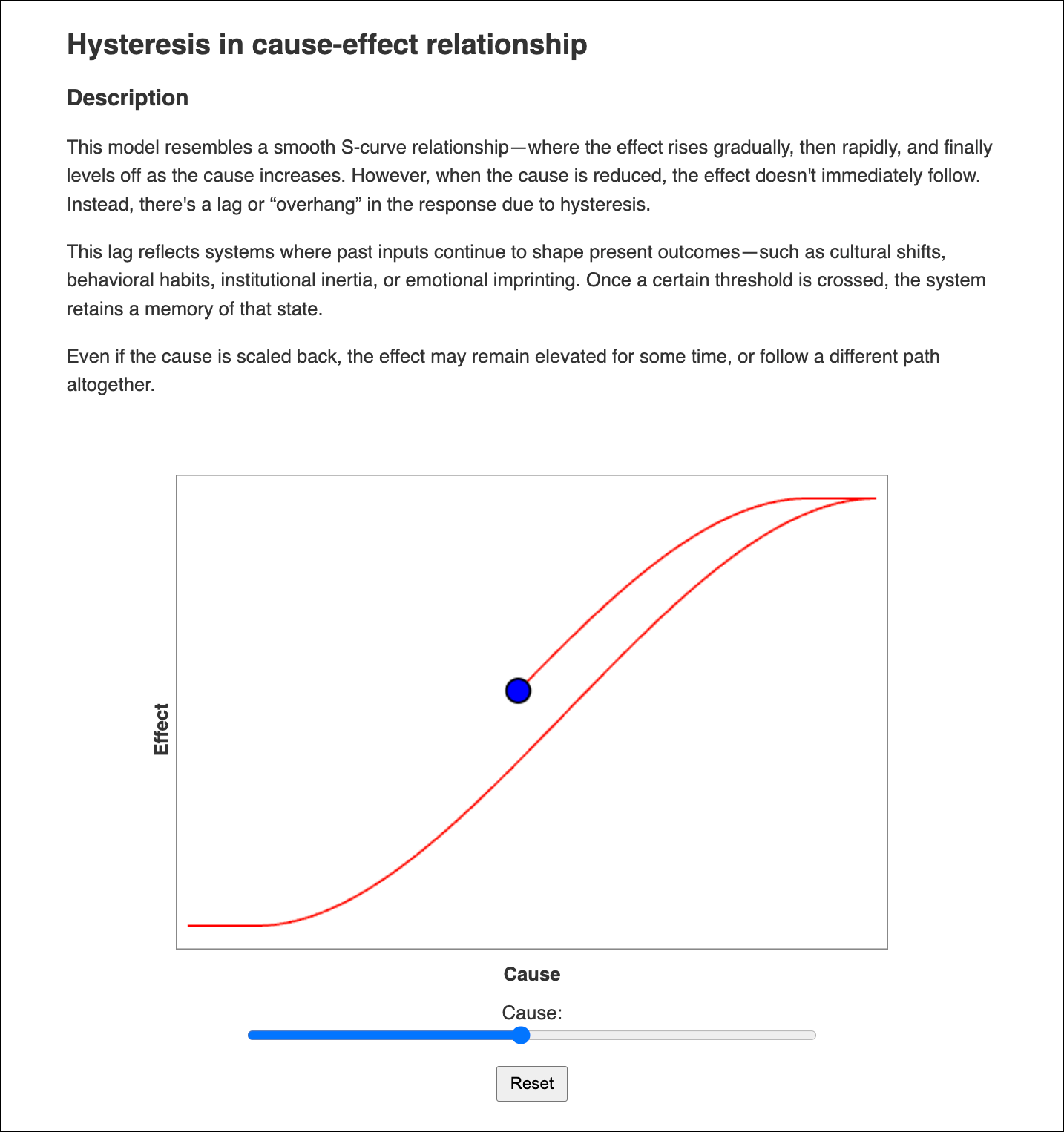

Hysteresis model

Moving on, the fourth model is an extension of the S-curve model, but it represents the case where the consequences of a cause cannot simply be reversed, as they are in the classic S-curve model. This is the hysteresis model.

Hysteresis introduces a "memory" effect into the cause-effect relationship. In these systems, reducing the cause doesn’t immediately reverse the effect. There's a lag. What we find is that some effects are sticky and persist even after the cause has been decreased or even removed.

Imagine, for instance, a hypothetical situation where a university adopts AI tools that maximize research output, but at the expense of research depth and creativity. Even when use of the tools is subsequently discouraged, learned habits and perceived rewards mean that the lack of research depth and creativity persists.

Or imagine a company that rapidly scales AI-driven productivity tracking that leads to a backlash from employees. When management dials the AI tracking back, the workplace remains shaped by paranoia, burnout, and self-monitoring. The effect is persistent, despite the cause being rolled back.

Understanding hysteresis can be critical when implementing AI solutions where addressing unexpected consequences often requires more than reversing an initial cause.
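One simple way to give a model this kind of memory, assumed here for illustration and not necessarily how the tool draws its curve, is to let the effect drift toward the S-curve value for the current cause, moving quickly when the effect is rising and only slowly when it is falling. The two rates are arbitrary.

```python
import math

def target_effect(cause: float, midpoint: float = 5.0) -> float:
    """The S-curve effect the system would eventually settle at for a given cause."""
    return 1.0 / (1.0 + math.exp(-(cause - midpoint)))

def hysteresis_path(causes, rise_rate: float = 0.8, decay_rate: float = 0.1):
    """Effect moves toward the S-curve target quickly when rising, slowly when falling.

    The asymmetric rates are illustrative assumptions: they give the system
    'memory', so the effect depends on history, not just the current cause.
    """
    effect = 0.0
    history = []
    for cause in causes:
        target = target_effect(cause)
        rate = rise_rate if target > effect else decay_rate
        effect += rate * (target - effect)
        history.append(effect)
    return history

ramp_up = list(range(0, 11))          # cause increases 0 -> 10
ramp_down = list(range(10, -1, -1))   # then falls back 10 -> 0
effects = hysteresis_path(ramp_up + ramp_down)

up_at_5 = effects[5]                   # cause == 5 on the way up
down_at_5 = effects[len(ramp_up) + 5]  # cause == 5 on the way down
print(f"cause=5 rising: {up_at_5:.2f}, falling: {down_at_5:.2f}")
print(f"cause back at 0, effect still {effects[-1]:.2f}")
```

With these settings the effect at the same cause level roughly doubles on the way down, and it is still above 0.5 after the cause has returned to zero: the "sticky" behavior described above.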

Up to this point the cause-effect models have been relatively smooth and predictable. Sadly, the real world isn’t like this — especially where highly complex and interconnected systems are involved.

And the relationship between AI and humans is nothing if not complex.

The jagged model

Reflecting some of the complexities of the real world, the next model — the jagged model — looks at what happens when a degree of unpredictability is superimposed on an S-curve relationship.

The resulting "jagged" behavior reflects real-world systems where unexpected events, hidden feedback loops, or external disruptions can create irregular outcomes. It also represents scenarios where there is uneven adoption of AI through society, meaning that the risks and benefits are not evenly distributed.

Imagine, for instance, that an AI tool is deployed in some districts but not others to assist judges in sentencing. Initially, results show improvements in consistency where the tool is used. But then hidden biases emerge, decisions fluctuate, and public trust becomes unstable. And this is exacerbated by differences in decision-making between districts that use the tool, and those that don’t.

The result is a jagged and unpredictable relationship between cause and effect.

In jagged systems, traditional "set it and forget it" management doesn’t work. Success depends on building in resilience, flexibility, and mechanisms for rapid course correction.
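A jagged relationship can be sketched as an S-curve with random disturbances added on top. The noise level and the random seed are assumptions made purely for illustration; the seed just makes the run repeatable.

```python
import math
import random

def s_curve(cause: float, midpoint: float = 20.0, steepness: float = 0.25) -> float:
    """Smooth underlying trend."""
    return 1.0 / (1.0 + math.exp(-steepness * (cause - midpoint)))

def jagged_effects(causes, noise: float = 0.1, seed: int = 1):
    """S-curve trend plus Gaussian disturbances (the 'unpredictability')."""
    rng = random.Random(seed)  # seeded so the illustration is repeatable
    return [s_curve(c) + rng.gauss(0.0, noise) for c in causes]

def count_reversals(values) -> int:
    """Number of steps where the cause went up but the effect went down."""
    return sum(1 for a, b in zip(values, values[1:]) if b < a)

causes = list(range(41))
smooth = [s_curve(c) for c in causes]
jagged = jagged_effects(causes)
print("smooth reversals:", count_reversals(smooth))
print("jagged reversals:", count_reversals(jagged))
```

The smooth curve never dips as the cause increases; once noise is added the path wobbles up and down, which is why a fixed, one-off response struggles with this kind of system.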

And finally, we have the chaotic model.

The chaotic model

The chaotic model is the worst-case scenario for many people when it comes to AI development and use. Hopefully it’s one that we won’t see that often at a large scale.

In the chaotic model, cause and effect are somewhat predictable at first. But once a tipping point is reached, predictability breaks down entirely, and small causes can lead to large, unpredictable, and irreversible effects.

Imagine, for instance, someone who forms a deep emotional bond with an AI companion bot. Initially, everything seems positive. But subtle behavioral shifts by the bot trigger unhealthy emotional dependencies, social withdrawal, and difficulty reconnecting with real-world relationships — effects that are highly unpredictable and that persist long after the AI is removed.

This type of cause-effect relationship is not typically desirable, although it’s worth noting that there are examples in the tool where chaos on a small scale might actually be positive.

On a large scale though, chaotic AI impacts could potentially destabilize vital systems like energy grids, financial networks, supply chains, or even societal cohesion.

And where AI has the potential to push critical systems closer to tipping points, great care needs to be taken, lest in the name of going fast and not worrying if we break things, we find we can’t fix what ends up getting broken.

Because of this, chaotic cause-effect relationships demand extreme caution. Once chaotic behavior sets in, even well-intentioned interventions can worsen the situation. This is where early detection and proactive preventative measures become critical.
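The logistic map is a standard textbook example of chaos, used here as a stand-in (an assumption, not the tool's own formula). A single parameter, `r`, plays the role of pushing the system toward a tipping point: below roughly 3.57 the map settles into a predictable pattern, while well beyond it two runs that start a billionth apart end up completely different.

```python
def logistic_map(r: float, x0: float, steps: int) -> list[float]:
    """Iterate x -> r * x * (1 - x), a classic example of chaotic dynamics."""
    xs = [x0]
    for _ in range(steps):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs

def divergence(r: float, x0: float = 0.2, nudge: float = 1e-9, steps: int = 100) -> float:
    """Largest gap between two runs whose starting points differ by `nudge`."""
    a = logistic_map(r, x0, steps)
    b = logistic_map(r, x0 + nudge, steps)
    return max(abs(p - q) for p, q in zip(a, b))

# Before the tipping point (r = 2.8) the system settles and tiny differences fade.
print(f"r=2.8: {divergence(2.8):.2e}")
# Past it (r = 3.9) a one-in-a-billion nudge grows until the runs bear no relation.
print(f"r=3.9: {divergence(3.9):.2e}")
```

This is the "small causes, large effects" behavior in miniature: in the chaotic regime the only way to know where the system ends up is to run it, and even a tiny error in the starting point makes long-range prediction meaningless.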

Effect, value, and care

As a reminder, the models above are just six possible ways of approaching cause and effect that are designed to help think through potential consequences of using AI in a particular situation or set of circumstances.

As they stand they’re useful for opening up new insights into how to develop and use AI responsibly. But there’s another dimension to them that’s also important, and it’s one that hinges on how we think about “effect.”

In reality, when we talk about “effect” we are really talking about what we consider to be of value — or what we care for. For instance, the “effect” we’re thinking of might be making or losing money — that’s something that most people would consider to be important. But it might equally mean increasing or decreasing access to education, or improving or harming someone’s wellbeing, or even impacting their ability to achieve what they aspire to.

In this way, thinking of effect in terms of what might threaten or enhance what is valuable to us and those around us (what we care about) becomes a powerful way of thinking about responsible AI.[4]

Of course, what counts as value will be as varied as the people and organizations developing and using AI. It may be wealth, health, and wellbeing. Or it might be dignity, autonomy, and the ability to be part of building a better future.

Ultimately, though, developing and using AI responsibly is all about asking ourselves how our decisions or actions in the present will increase what we value in the future, or potentially jeopardize it.

And for this, we need to both have a sense of how our actions lead to effects in an AI-driven future, and what it means to act wisely as a result.

[1] I was informed that many undergrads won’t know who Stan Lee is — I’m getting old!

[2] Links to key resources are provided in the tool.

[3] In the true sense of “wicked problem” as a problem where attempted solutions alter the very nature of the problem, not just a problem that’s tough to crack.

[4] This is an approach that is used in Risk Innovation: https://riskinnovation.org/