Parasocial Relationships: Problematic Practice or Public Promise?

This year's Cambridge Dictionary Word of the Year is "parasocial"—spurred on by growing concerns over our love affair with AI chatbots

Well, this is awkward.

Back in May I wrote about why parasocial communication is important, and why we need more of it.

Yet despite my enthusiasm, the good folks at Cambridge Dictionary have just declared “parasocial” their 2025 Word of the Year. And they do not cast it in a good light.

Back in May, “parasocial” was still a word that academics were more likely to use than social media influencers. At the time, it fit what I was writing about very well: the idea that feelings of connection fostered by candid, authentic and personal communication can enhance the effective and useful flow of information between academics and society writ large.

Here, I suggested that parasocial relationships developed through platforms like blogs, podcasts, and other forms of social media—I used the example of the Modem Futura podcast I co-host with Sean Leahy—present an emerging and powerful way for experts to have relevance and impact at scale.

This, I argued, is because these parasocial relationships can draw back the curtain on the process of creating new knowledge, and help people feel they are connected to discussions and discoveries they would usually be excluded from.

It’s a concept that I practice in my own work, and still stand by. But Cambridge Dictionary has, I must confess, put a bit of a damper on it.

Thanks!

The journey of parasocial from just another word to THE word started, according to Cambridge Dictionary, in June this year, when a thread on X around a YouTuber blocking their “number 1 parasocial” went viral and led to a spike in searches for the word.[1]

Then in July, the release of Grok’s risqué anime AI chatbot companion raised concerns around the risks of unhealthy parasocial relationships with AI. Those concerns have since spilled over to a growing number of AI applications including, most recently, kids’ toys!

Following on from this, the Attorneys General of 44 US jurisdictions sent a letter to 13 AI companies in August that further fueled interest in the word and its connections to AI. The letter stated that:

“In the short history of chatbot parasocial relationships, we have repeatedly seen companies display inability or apathy toward basic obligations to protect children.” The letter was a plea to the companies to think far more carefully through the potential consequences of their actions, concluding with “If you knowingly harm kids, you will answer for it.”

Also in August, according to Cambridge Dictionary, “Global coverage of the way in which Taylor Swift announced her engagement to Travis Kelce caused lookups of parasocial to surge as the media dissected fans’ reactions.”

And then in September, driven by increasing associations between the word and AI, Cambridge Dictionary updated its definition of parasocial to:

involving or relating to a connection that someone feels between themselves and a famous person they do not know, a character in a book, film, TV series, etc., or an artificial intelligence (my emphasis)

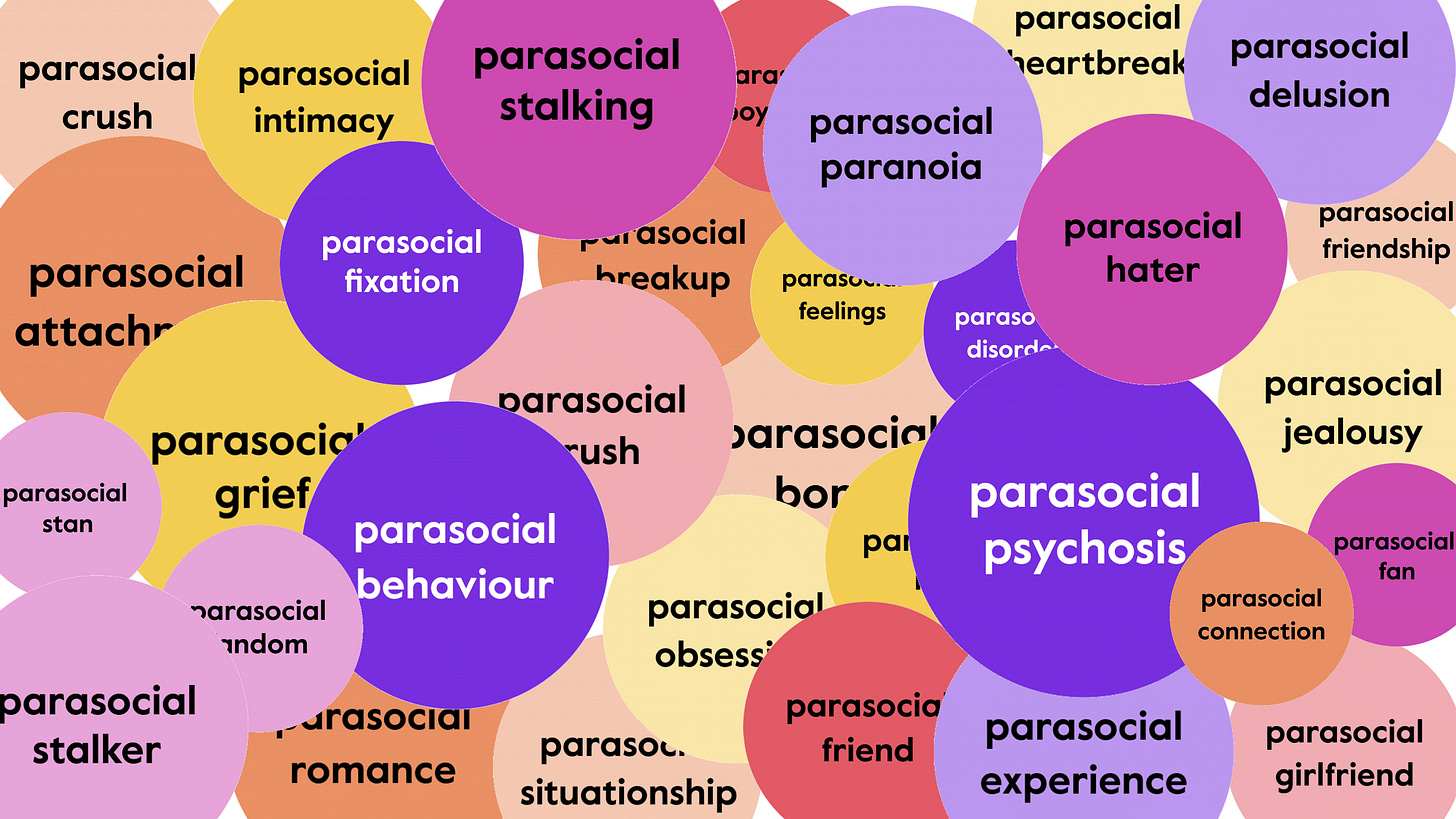

Through all of this, there are distinctly negative emerging connotations connected to the term parasocial. These are reflected in Cambridge Dictionary’s examples of how the word is being used in 2025, including “parasocial crush,” “parasocial breakup,” “parasocial obsession,” “parasocial paranoia,” and “parasocial stalker.”

Clearly I read the tea leaves wrong back in May!

And yet, despite these shifting associations, I would still argue that the idea of meaningful yet one-way relationships with people presents opportunities for academics—and experts more broadly—to scale their reach and impact in socially beneficial ways.

Of course, the question left hanging here—and one which I did not address in May—is how this extends to AI.

The reality here is that, over the past year, there’s been a massive upsurge in people developing parasocial relationships with AI bots and platforms, and in some cases this is being associated with extremely concerning potential risks such as mental and emotional health impacts, and even self-harm. And looking forward, I suspect that we’re going to see a continuing shift in “parasocial” being associated with some of the more worrying impacts of artificial intelligence use.

This needs far more attention than it’s currently getting. But it also raises questions around whether parasocial relationships with AI could be beneficial, if they are done right.

We’re already seeing a growing number of users who, I suspect, would say that their relationship with their AI of choice enhances their life and wellbeing in unexpected ways. This extends from personal health advice (as was recently covered by the New York Times) to mental health and wellness support, and even mentoring and tutoring (and what are educational establishments doing with advanced agentic AI if not building bots that are designed to foster parasocial relationships with students?).

Whether these one-way human-tech relationships turn out to be healthy or unhealthy in the long run is still to be determined. But what is clear is that, despite slightly shady connotations, the association between “parasocial” and AI isn’t necessarily bad.

And, not surprisingly, I would argue that the same goes for parasocial relationships with people, as long as we understand what differentiates a healthy parasocial relationship from an unhealthy one.

And maybe this is the most important takeaway from this year’s Cambridge Dictionary Word of the Year.

[1] I must confess I had some trouble tracking down the source of this claim (probably because I don’t hang out enough on X) and at one point began to wonder if it was an AI-generated hallucination! But here’s the original thread on X where, at the time of typing, the initial post has attracted over 42 million views!

Oddly enough, at the HS teaching level, this term had a significant boost within my Journalism classes in early 2024. That continued in a new course I started teaching in Sept 2024, AI and Ethics. It was purely based around fandom, often for musicians, certainly Taylor Swift and her constant mastery of parasocial brand marketing (at least the argument then was mastery, before Showgirl and what seems like the most widespread negativity I've seen towards Taylor Swift ever at the HS level).

There was also continued discourse about the possibility of anyone in 2025 or later being able to create and sustain a brand as Swift's team has done through pretty much the lifetime of my students last year.

But parasocial without an actual person on the other end of the medium? Just electricity? Disembodied language? Or even LLMs within physical AI, like an Optimus robot?

Your original argument was and is fine. Thank you for writing and sharing it!

All these end-of-year pop-linguistic selections are 2010s attention economy holdovers, even feeling slightly like a last-effort push to grab attention through browsers before SLMs become the heartbeat of people's daily knowledge...or at least that's an effort the tech sector would like to see happen.

Looking forward to these larger shared experiences of phenomenology, cybernetics, embodiment leading hopefully to better shared experiences daily across society, too.