Spiky surfaces and jagged edges: Moving beyond what's known in an Age of AI

Do we need to move beyond simple models of knowledge creation and discovery as AI becomes increasingly capable?

Will AI ever be able to transcend the “knowledge closure boundary” — the conceptual barrier between what’s known (or what can be inferred from what’s known and understood) and what is not — and make truly novel and transformative discoveries?

There’s a growing debate around what might be possible here versus what lies in the domain of optimistic (and, according to some, fanciful) speculation — and much of this is grounded in different theories and ideas around how discoveries are made, and new knowledge generated.

I was reminded of this by my colleague Subbarao (Rao) Kambhampati in a post on X this past week, and it got me thinking about how we conceptualize knowledge generation in an age of AI.

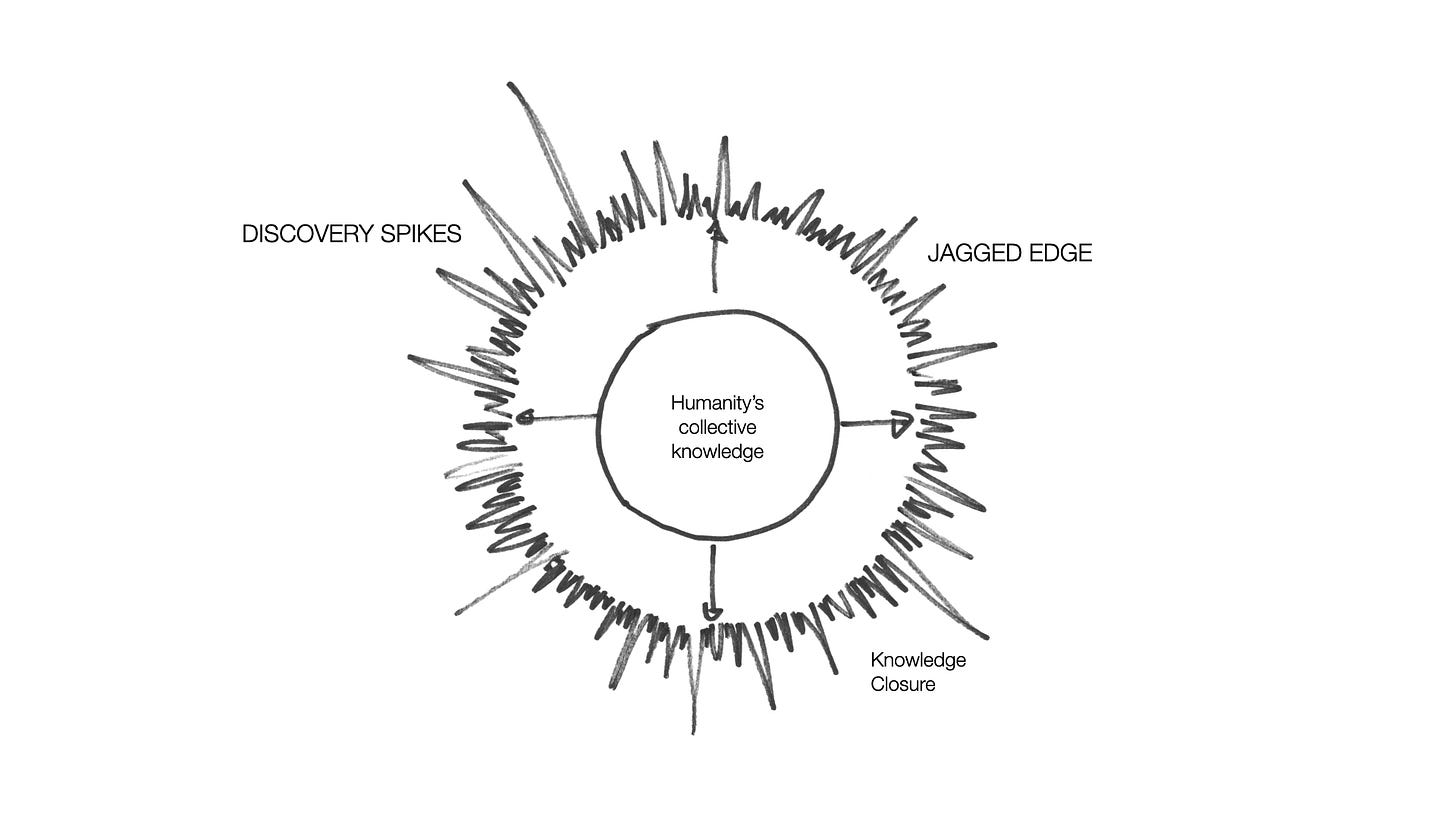

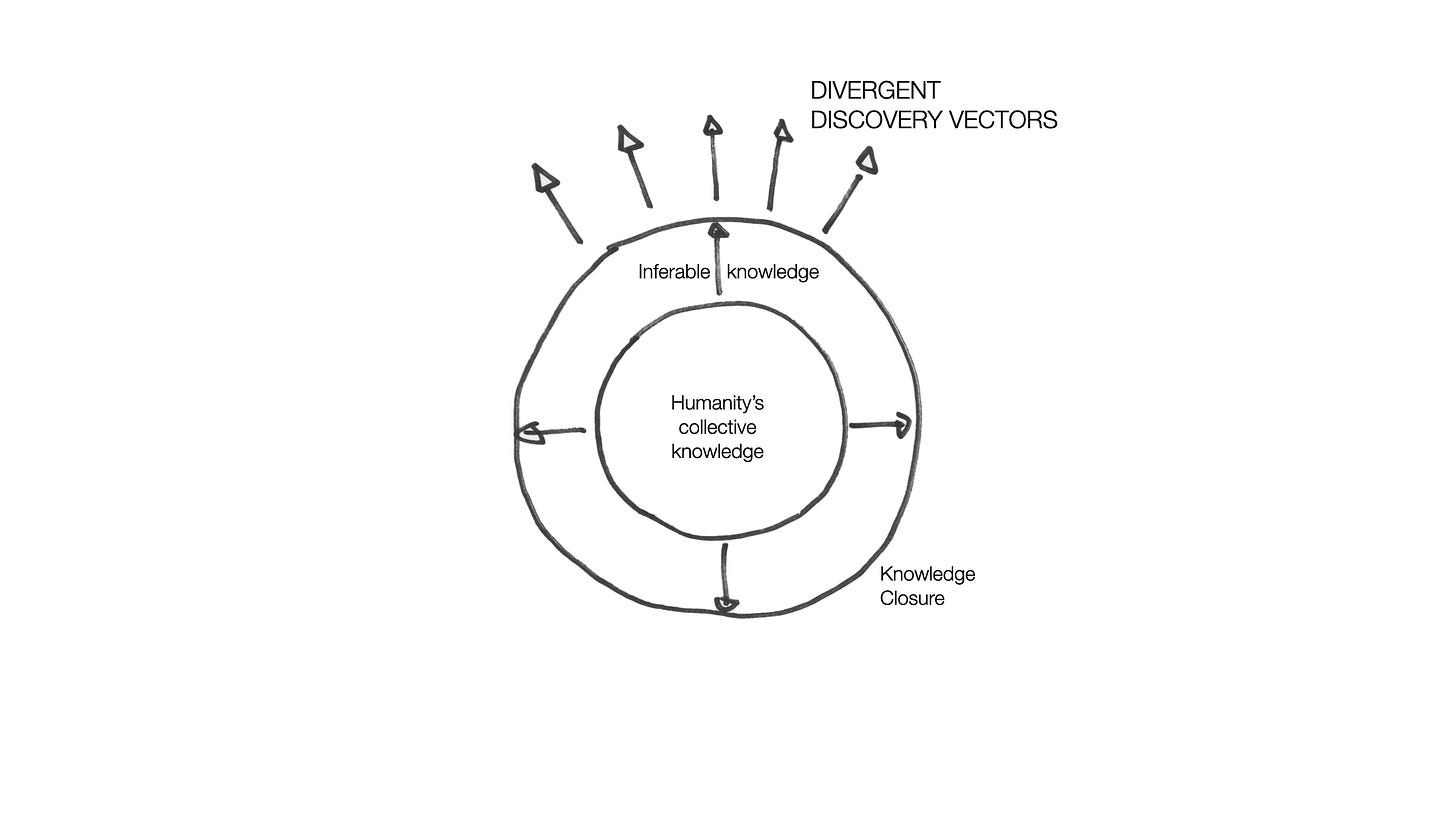

Rao neatly captured a perspective that’s widely held around new discoveries in the diagram above. At its core is the sum total of what we collectively know (if you want to get Rumsfeldian, the “known knowns”). Beyond this is the domain of knowledge that we can infer from what we know, including inference through combining what we know in new and interesting ways.

This is contained within a hard boundary (the knowledge closure boundary) — a boundary that cannot simply be transcended by novel combinations of what’s already known.

Rao’s argument — and that of many others in the field of AI — is that machines are incapable of unaided discovery beyond this boundary, at least without being embodied in a form where they can fully experience the physical universe and discover new things through “hands on” experimentation.

The diagram is a useful way of deflating some of the hype around AI-generated discovery (including speculations that artificial intelligence is somehow going to make the impossible possible within the next decade or so). And yet, I worry that it’s an over-simplification that potentially obscures what might indeed be possible as AI models become increasingly capable.

Part of my reasoning here is that there’s a long history of thinking around the nature of knowledge generation and discovery that suggests that things aren’t as simple as we might like to think: from the “paradigm shifts” of Thomas Kuhn, to the concept of the “adjacent possible” proposed by Stuart Kauffman and popularized by Steven Johnson in his 2010 book Where Good Ideas Come From, and beyond. [1] And while much of this thinking focuses on human-centric processes (remembering that we represent embodied and embedded intelligences that have the ability to learn through real-world experience and experimentation), it’s part of a body of understanding that suggests that discovery is complex, and not as well understood as we sometimes might like to think.

And so, spurred on by Rao’s post, I started playing around with what a conceptual diagram might look like that moves beyond the one he showed.

The resulting thought experiment is hopefully useful, if not necessarily that original. Ironically it draws on a lot of prior thinking and ideas, and so sits firmly in Rao’s core of collective and inferable knowledge. At the same time, it does suggest that there may be alternative ways of thinking about knowledge discovery in an age of AI that are insightful.

Starting with the Knowledge Closure diagram

This is perhaps unfair as I know Rao’s thinking is sophisticated here, but there are three aspects of the knowledge closure boundary as it’s presented in his post that sit uneasily with me:

1. It’s a divergent model of discovery

Taken literally, discovery at the “knowledge closure” boundary in this model is implied to be a vector that always points outward from the core of what’s known. And because the boundary is a circle, all discovery vectors diverge from each other.

I know that I’m pushing the diagram beyond what was probably intended. But the very formulation of a smooth convex closure boundary with “discovery vectors” that always move outward from a core of knowledge removes the possibility of complex, intertwined, multi-directional discovery pathways. And this conceptually limits how the model opens up thinking around what might be possible. [2]

2. It assumes a smooth knowledge closure front

The diagram in Rao’s post implies that the knowledge closure boundary is smooth and is pushed out at a uniform rate in all directions. This is another inference that I think is probably an over-interpretation. But it’s important, as how a model (even a simple one) is interpreted and applied can influence thinking. And while I know that I’m being a little cheeky here, this does make it harder to think of the boundary as complex, messy and convoluted, all of which impact how we conceptualize knowledge generation and discovery.

3. It’s dimensionally constrained

Because the model is two-dimensional, it limits thinking about how different forms of understanding and knowledge generation may influence and impact discovery. This becomes especially important when knowledge systems are considered outside of the natural sciences and engineering. But it also applies to knowledge generation and discovery across philosophically different STEM disciplines.

Of course, in highlighting these three concerns with Rao’s image, I’m intentionally setting things up for the following thought experiment. And as a result I’m being a little playful. But in addressing these three points, intriguing possibilities do begin to emerge.

And so on to the thought experiment:

Jagged Edges and Discovery Spikes

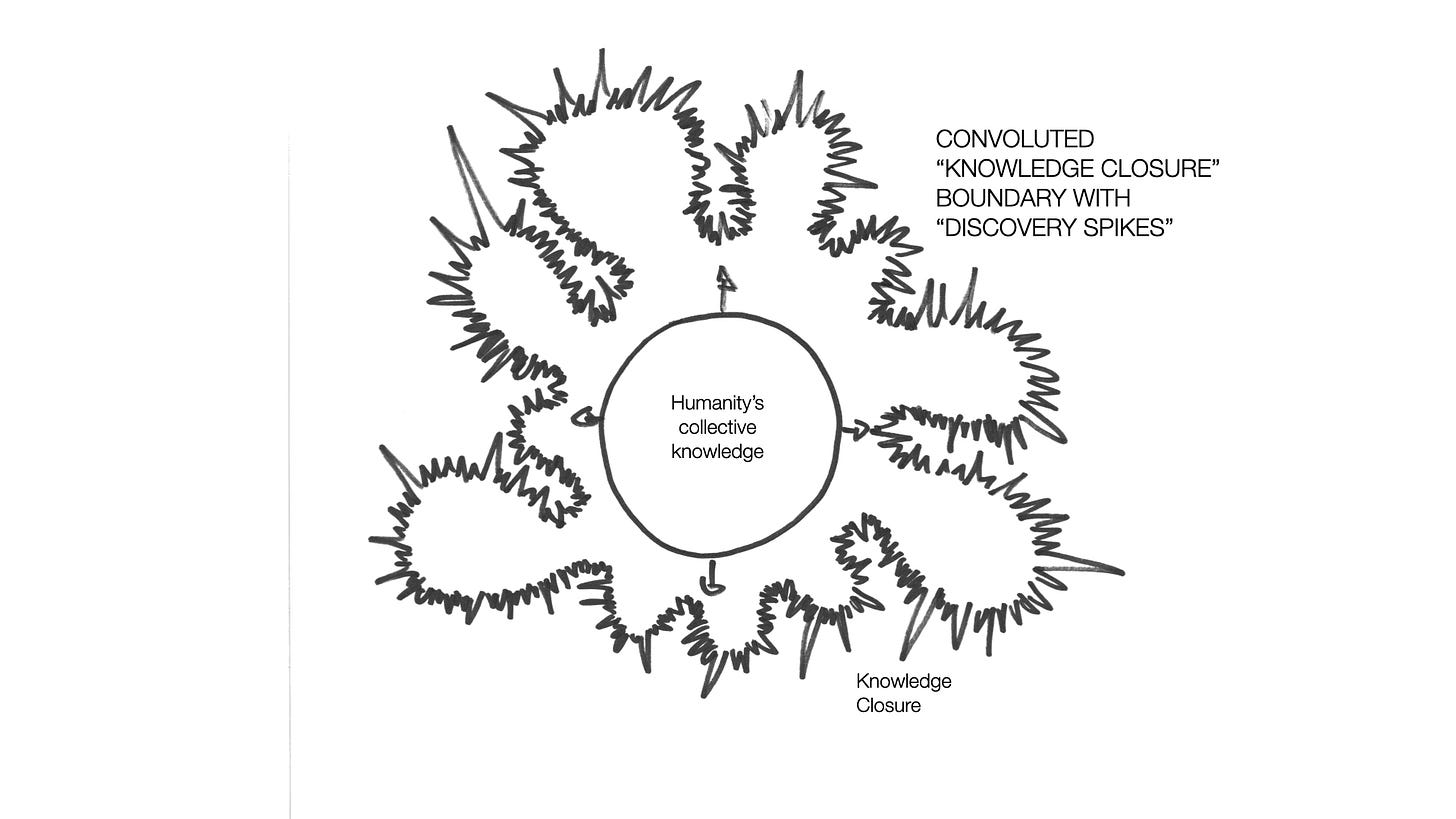

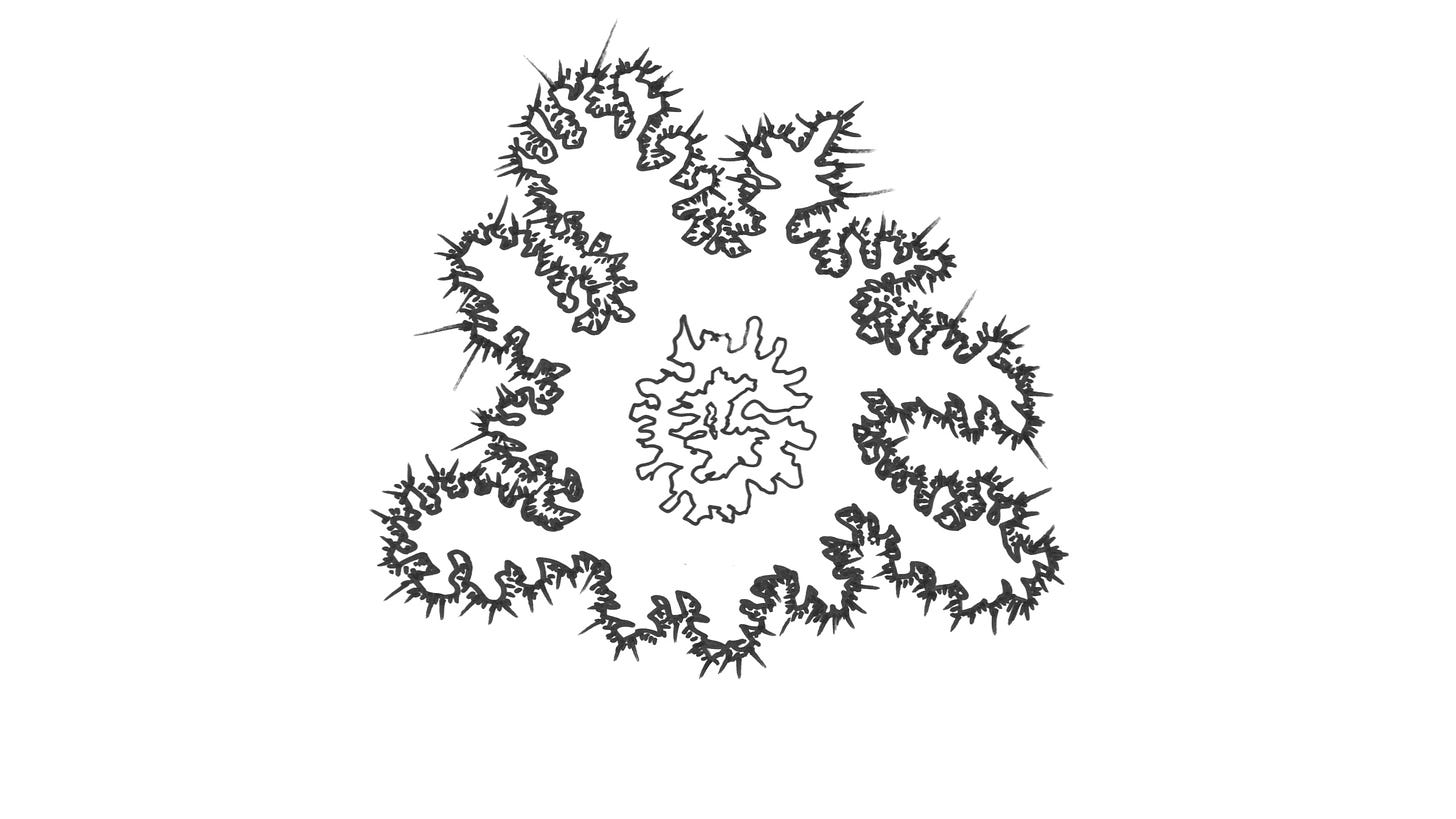

The first step in this thought experiment is to imagine that the knowledge closure boundary (the line between what is known or inferable and what is not) is not smooth and uniform, but convoluted, jagged, and “spiky”: an edge where discovery progresses at different rates, in different ways, and through different modalities.

This captures in part the emerging language around the “jagged edge” of innovation, where emerging capabilities and their adoption are not uniform through society. It’s a term that was seeded by Andrej Karpathy with the phrase “Jagged Intelligence,” but is increasingly widely used in the context of AI and tech innovation more broadly.

Knowing how discovery often works, I like the extension of the idea of “jaggedness” to “spikiness” as researchers and scholars push the bounds of what is known through their work.

I particularly like spikiness here (and the idea of “discovery spikes”) as it captures the idea of a highly uneven frontier of knowledge which is being pushed forward by individuals, small groups, large teams, and multinational initiatives — but all in very loosely coordinated (and sometimes totally uncoordinated) ways.

This move from smooth to spiky brings some nuance to the knowledge closure model. But it still represents discovery as a divergent vector process, moving from the central core to the outer unknown.

What, though, if the spikes don’t all point in the same direction?

Convoluted surfaces

In a 2009 blog post, marine geologist Kim Kastens suggested that the boundary of human knowledge is more “amoeba” like — a fuzzy, undulating boundary, where “pseudopods of known protrude into the unknown.”

This idea — which makes a lot of sense — can be extended to a knowledge closure boundary that is deeply convoluted — more so than in Kim’s sketch — and that also includes the aforementioned “discovery spikes.”

This relatively small expansion of the model leads to some quite profound possibilities. Because the surface is now both convex and concave, the direction of the spikes is no longer always divergent. And because of this, there’s the possibility of spikes from different parts of the surface coming close to each other — and even intersecting.

The result is the potential for recombinant discovery (combining knowledge from two or more spikes) that differs from the inferable knowledge in Rao’s diagram. It’s still building on what is known, but in highly novel ways.

This visualization has overtones of what are typically referred to as paradigm shifts or step-changes in understanding — Einstein’s theory of relativity for instance, the theory of natural selection, germ theory, or the development of quantum physics. These spike intersections expand the universe of possibilities through unexpected and serendipitous discoveries that move beyond inferred knowledge or — possibly — experimentation-driven “embodied discovery.”
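The geometric point behind intersecting spikes can be checked with a few lines of Python. This is only a minimal sketch under simplifying assumptions that are mine rather than part of the model: the boundary is locally a circular arc, each spike is a straight ray normal to it, and the names `spike` and `meeting_point` are hypothetical helpers invented for the illustration.

```python
import math

def spike(angle, radius=1.0, convex=True):
    """Return (origin, direction) for a spike normal to a circular arc centred at (0, 0).

    convex=True: the known region is inside the arc, so the spike points away from the centre.
    convex=False: the arc is a bay in the boundary, so the spike points toward the centre.
    (Illustrative assumption: real boundaries are not circular arcs.)
    """
    ux, uy = math.cos(angle), math.sin(angle)
    sign = 1.0 if convex else -1.0
    return (radius * ux, radius * uy), (sign * ux, sign * uy)

def meeting_point(spike_a, spike_b, eps=1e-12):
    """Return the point where two spikes cross ahead of both origins, or None."""
    (ax, ay), (adx, ady) = spike_a
    (bx, by), (bdx, bdy) = spike_b
    # Solve a + t*da = b + s*db for t and s using Cramer's rule.
    det = bdx * ady - adx * bdy
    if abs(det) < eps:
        return None  # parallel or collinear spikes are ignored in this sketch
    t = (bdx * (by - ay) - bdy * (bx - ax)) / det
    s = (adx * (by - ay) - ady * (bx - ax)) / det
    if t <= 0 or s <= 0:
        return None  # the lines only cross behind an origin: the spikes diverge
    return (ax + t * adx, ay + t * ady)

# Two spikes on a convex (circular) boundary versus two on a concave bay.
on_convex = meeting_point(spike(0.3), spike(1.2))
on_concave = meeting_point(spike(0.3, convex=False), spike(1.2, convex=False))
print(on_convex, on_concave)
```

On the convex arc the two spikes never meet, so the first result is `None`. On the concave arc they cross at the centre of the bay, up to floating-point rounding. Straight rays and circular arcs are far cruder than the hand-drawn surfaces here, but they are enough to show why concavity is what makes intersections possible.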

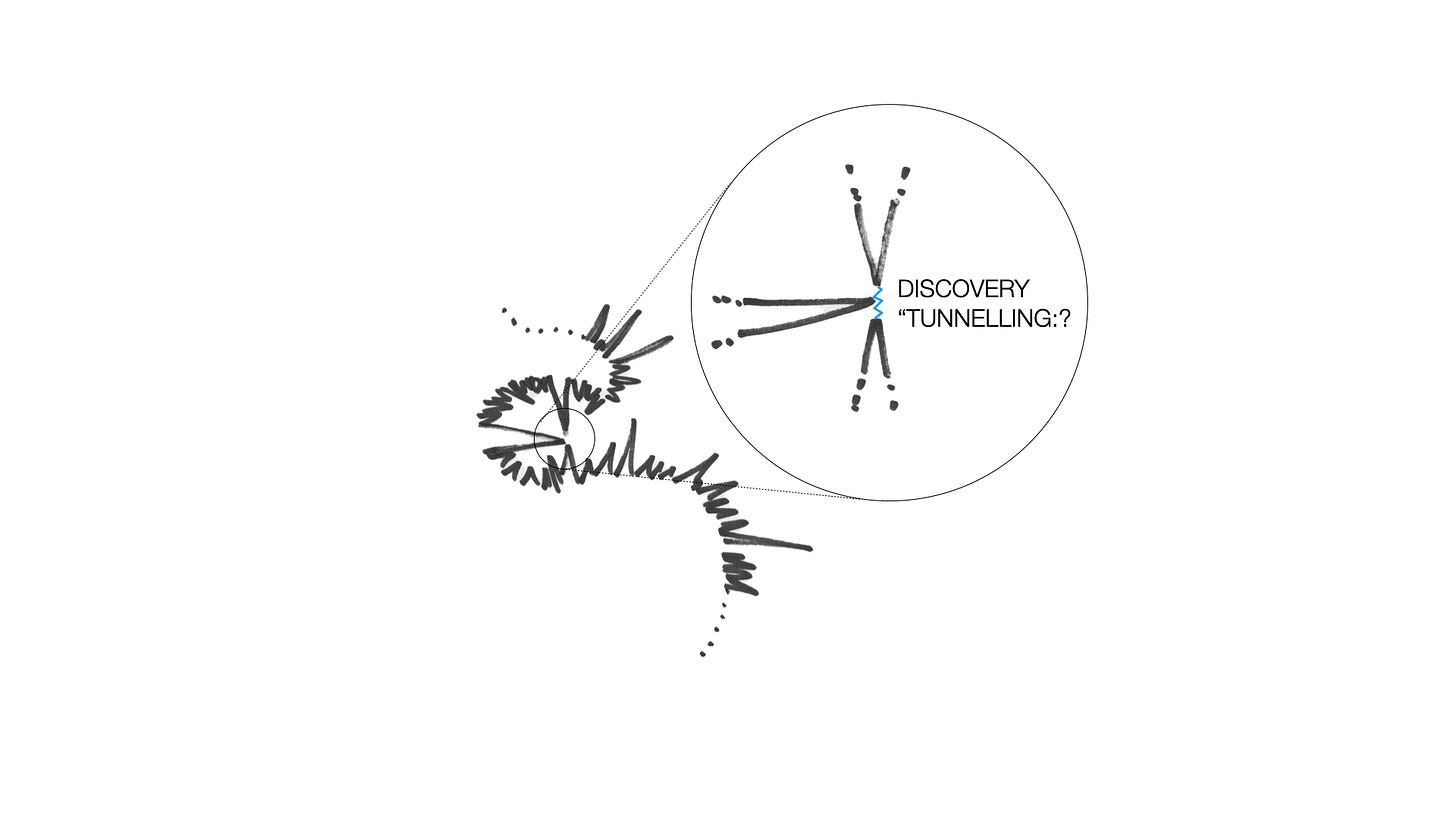

But there’s another aspect of this extension to the model that I think is interesting, and that is considering what happens when two spikes get close to each other, but don’t touch.

Is it possible that, in these cases, AI will be able to act as a catalyst or “barrier-thinner” that allows us to “tunnel” between them and generate knowledge that would not have been possible without it?

To be clear, we’re not talking about AI making new discoveries unaided here — not yet at least. But this conceptual model does allow us to think about how step-change discoveries might be catalyzed by AI in ways that are not captured in the standard knowledge closure model — and may open up ideas around “leaky boundaries” where closure is breached.

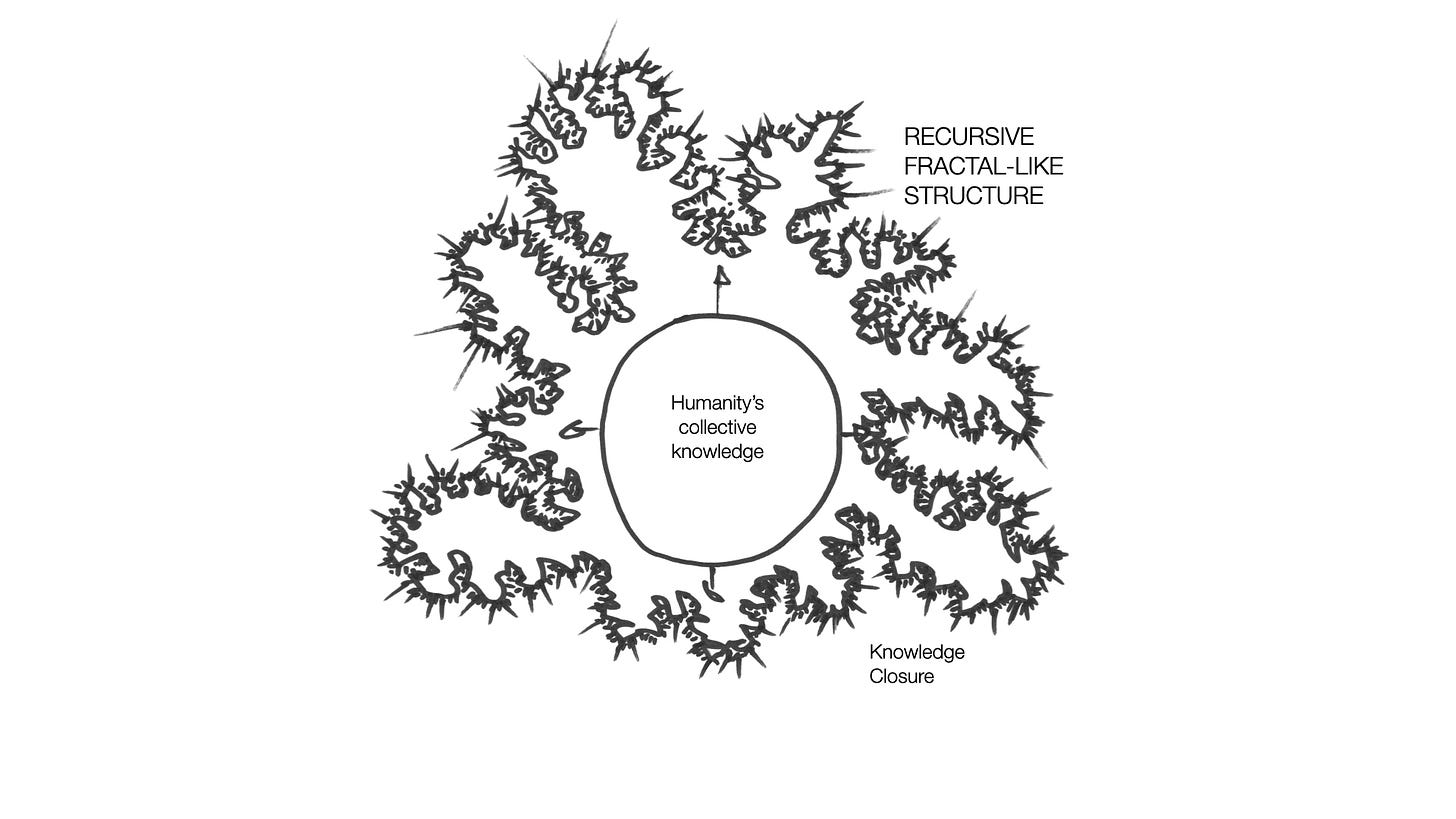

Fractal-like boundaries

From the convoluted boundaries above, it’s a small step to imagining that the knowledge closure boundary is not simply convoluted, but has recursive fractal-like structure — so the closer you look, the more convolutions and spikes you see.

Given the complexity of research, scholarship, and knowledge generation, this would seem reasonable. And it moves us further toward thinking about this edge of what is known as something that’s more complex and even dynamic than a hard boundary — and something where it gets easier to imagine human-AI actions, and maybe one day unaided AI, extending the boundary in unexpected ways.
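For readers who like to see “the closer you look, the more you see” in numbers, here is a small sketch using the Koch curve as a stand-in. The Koch construction is my choice of a well-known fractal, not a claim about the actual shape of any knowledge boundary, and `koch_refine` and `boundary_length` are hypothetical helper names.

```python
import math

def koch_refine(points):
    """Replace every segment with the four-segment Koch motif (one new spike per segment)."""
    cos60, sin60 = math.cos(math.pi / 3), math.sin(math.pi / 3)
    refined = [points[0]]
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        dx, dy = (x1 - x0) / 3, (y1 - y0) / 3
        p1 = (x0 + dx, y0 + dy)            # end of the first third
        p3 = (x0 + 2 * dx, y0 + 2 * dy)    # start of the last third
        # Apex of the spike: the middle third rotated by 60 degrees about p1.
        p2 = (p1[0] + dx * cos60 - dy * sin60, p1[1] + dx * sin60 + dy * cos60)
        refined.extend([p1, p2, p3, (x1, y1)])
    return refined

def boundary_length(points):
    """Total length of the polyline through the given points."""
    return sum(math.dist(p, q) for p, q in zip(points, points[1:]))

boundary = [(0.0, 0.0), (1.0, 0.0)]  # a "smooth" unit-length stretch of boundary
for level in range(4):
    print(level, len(boundary) - 1, round(boundary_length(boundary), 4))
    boundary = koch_refine(boundary)
```

Each refinement multiplies the number of segments by four and the measured length by 4/3, so the same stretch of boundary keeps getting longer and spikier as the resolution increases. That is the sense in which a fractal-like edge offers more frontier than a smooth one.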

Spiky fractals all the way down

So far this thought experiment has just dealt with the outer boundary between what is known and what is not. But what if this idea of a fractal-like spiky interface also applies to the boundary between known and inferred (or inferable) knowledge?

And why stop there? What if it’s spiky fractals all the way down?

This would make sense as, within research and scholarship, the lines are often blurred between what is known and what is not — and even what is known within one circle or community, and what is not.

If this is the case, what does this mean for AI-augmented discovery, or even human-out-of-the-loop AI discovery?

The short answer is that I’m not sure. But as interest grows in accelerating discovery through working with advanced frontier models, this conceptualization does open up ways of thinking that I suspect are likely to lead to new research and discovery models.

However, we’re still dimensionally constrained in this extension of the discovery diagram. What happens when we take the next step and break away from those constraints?

Multi-dimensional fractal spikiness

One of my concerns with the two-dimensional model of knowledge closure is that it represents a rather narrow world view of knowledge and discovery, and one that is rooted in specific disciplines and domains. It might make sense, for instance, if you are a physicist or engineer, or a technologist. But things get a little muddier as you extend the model to other scientific disciplines. And when you add in the social sciences, the arts and humanities, and the many alternative ways of knowing that aren’t captured in the standard “academic world view,” the model begins to look rather limited.

However, rather than being constrained to two dimensions, what if we imagine the “spiky fractals all the way down” model to exist in n-dimensional space, where each dimension represents different scholarly and intellectual traditions, different disciplines, different ways of knowing and understanding, and more?

I’m not going to even attempt to sketch this! But a conceptual model begins to emerge where spikes at the boundary of knowledge within this multidimensional space now have the ability to cut across dimensions in potentially transformative ways.

To anyone who works across disciplines or is unbounded by conventional ideas around disciplinary expertise, this should feel familiar. There’s a wealth of experience around serendipitous discovery where very different ways of knowing and understanding collide. Yet this is not as widely recognized in science, engineering, and technology as you might expect.

And even though this forms part of our tacit (and sometimes not so tacit) knowledge as a species, I wonder what the implications of the conceptual model of n-dimensional fractal-like spiky knowledge surfaces are to how we think about AI and discovery — or even just human discovery.

Is this even helpful?

Going back to the original catalyst for this thought experiment, it’s worth coming back to the question of whether any of this changes anything. Does this perspective on knowledge closure change how we might think about AI-driven discovery?

I suspect many will conclude that it doesn’t — and that’s fine, as the point of a thought experiment like this is to stimulate thinking and discussion rather than provide definitive answers. But if pressed, I think I would say that it does.

There’s nothing that’s foundationally new here; many of the underlying ideas have been explored to some extent by others. But when brought together, the “n-dimensional fractal-like spiky discovery model” does, I think, open up new ways of thinking about how we develop new knowledge and understanding, how we might do this in different (and maybe more effective) ways, and how the rise of intelligent machines potentially changes things.

And this is important, because whether novel discovery truly is the domain of humans, whether it can be catalyzed through human-AI partnerships, whether future embodied AI will be able to emulate what many believe only humans are currently capable of, or whether it turns out that even disembodied AI one day will have the capacity to make and recognize discoveries that are beyond our ken, one thing is certain: Emerging AI models are challenging our understanding of knowledge and discovery.

And to ignore this is likely to lead to missed opportunities at best, and dangerous blindsides at worst.

[1] This is a whole article in itself. I resisted the temptation to go all academic here with a litany of references and citations, but suffice it to say that philosophers and others have grappled with the nature of knowledge and understanding for centuries.

[2] I’m afraid you have to put up with my rather crude hand drawings here. Of course I could have used AI. But the reality is that AI image generators are good at fancy and bad at precision, in that at best they will typically only approximate what you are looking for, and that on a good day. This is fine if you are not fussed what you get. But I needed sketches that matched what’s in my head, and so I decided to go “artisanal” and sacrifice fancy for precision. Sorry!

« Will AI ever be able to transcend the “knowledge closure boundary” » -- As long as the AI in question is Turing-complete, my answer is "yes". To argue otherwise requires taking the position that there is something about the computation that happens in human brains that is beyond the physical. That creativity is magic. For me, the world is simpler: a computable function is a computable function.

On a related note, you might enjoy the results in this paper: https://arxiv.org/abs/2507.18074

These ideas deeply resonate with my efforts to integrate understanding across academic domains, which failed due to a number of issues. I am an interdisciplinary researcher, no longer part of Academia.... and my fields of 'expertise' are extremely diverse, but include material science, intelligent systems, modeling & simulation, information visualization, complexity, system dynamics, and I'm a creative artist, adventurer, and grandmother... I was designing curriculum for teaching computing to art & technology students as a PhD effort...when a complex family trauma event happened...7 years ago...which triggered childhood trauma. I studied models of consciousness and have been able to 'flip my beliefs' and 'heal from trauma'.......based on my understanding and included these ideas in my curriculum, including teaching neuroscience of kindness and branching narrative stories for animators and game designers. If we consider the universe as a fractal unfolding, learning skills to integrate 'negative feedback'....while pushing forward to gain new experiences based on subconscious 'fears'....can allow for developing personal momentum and a trajectory of wisdom...where intentional exposure to novel experiences can guide neuroplasticity to search for and understand connections across domains....but giving up on the illusion of control and trusting that one can use AI systems to help integrate and present insights. It's an interesting journey and I hope to help inspire more interdisciplinary questions about the nature of relationships between knowledge, understanding and wisdom that you are considering. Reach out if you'd like to chat.