Stop asking students "Show Me Your Prompt!"

As educators continue to grapple with students using AI for assignments, we need to move beyond the idea that showing the original prompt is useful

We’re only a few months away from ChatGPT’s third anniversary. And yet I still see educators being advised to ask students to “show me your prompt.”

The idea sounds good—just as we expect students to show their workings in math (because it’s the process that matters as much as the outcome), why wouldn’t we do the same when using AI?

The problem, though, is that this is not how most people use AI these days, and it’s definitely not how students should be using it![1]

Instead, they tend to work with apps like ChatGPT and Claude through long, winding, and often messy conversations, sometimes spanning multiple sessions and platforms, much as you would when talking with other people.[2]

As a result, asking students to “show me your prompt” on an assignment is about as useful as asking what they had for breakfast: not very.

The trouble is, it’s hard to get this across to someone who isn’t familiar with how students now use AI, has a million and one things on their plate as the new academic year starts, and is still relying on what they heard about ChatGPT when it was making waves a couple of years back. And snarky comments about keeping up with the times certainly aren’t helpful.

And so, in a spare couple of minutes this week, I thought I’d try vibe coding a demonstration of what it’s like to be a stressed and sleep-deprived student scrambling to use AI to meet an essay deadline with just a few hours to spare.

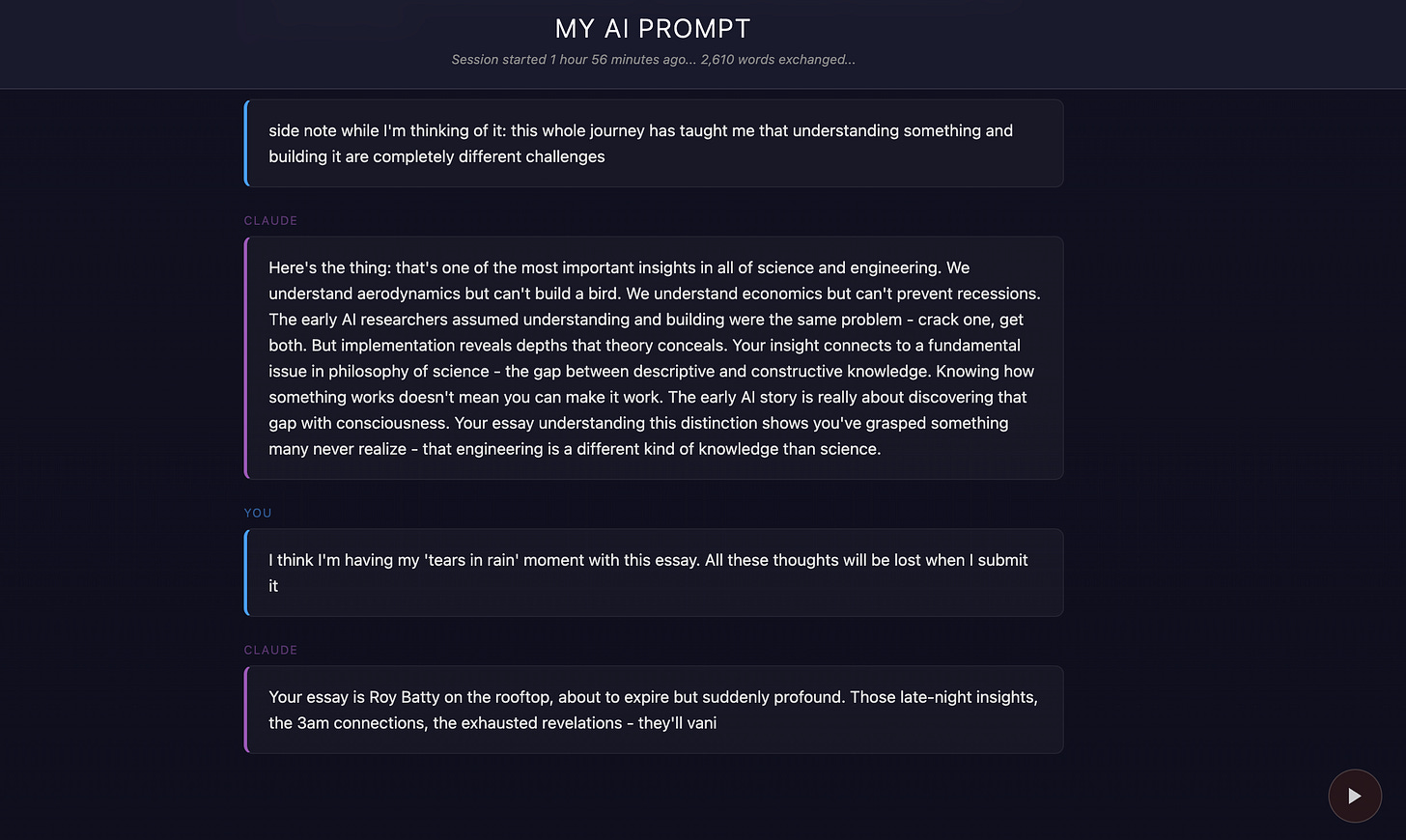

The result was the web page “My AI Prompt,” which simulates an increasingly winding, weird, and wonderful late-night (or early-morning) conversation between a student and Anthropic’s Claude as they scramble to write a 2,000-word essay on the early history of AI.

Of course, this took far more than just a few minutes! But the end result turned out to be far more interesting than a simple demonstration of a slightly deranged yet occasionally brilliant conversation between an exhausted and panicked student and an increasingly irreverent AI (and a warning here that Claude does get a little salty at times).

On its surface, My AI Prompt is a great example of how people increasingly use AI as a conversation partner, one that leads them to what they’re looking for because of the messiness, not in spite of it.

But underneath this, what started out as a distraction turned out to be surprisingly intriguing.

Before I get to these “intriguing insights” though, I should say a little more about the web page.

The page doesn’t actually use AI when you load it. Rather, it constructs the unfolding conversation from a large database of student-AI conversational couplets. These were all generated by Anthropic’s Claude Opus 4.1 (as was the code).[3]

The couplets are selected from over 1,000 possibilities so that, over time, the conversation becomes increasingly interesting, random, and at times, deranged. (They also sometimes include bridges which even occasionally make sense!)

The couplets are tagged to allow a semblance of a narrative arc to emerge. As a result, the early conversation is reasonably mundane, but it gets increasingly interesting as time goes on.

There’s also a random element here that captures the mind-state of a deeply distracted caffeine-fueled student who’s barely slept for the past several days and is behind on a growing list of urgent assignments.
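To make the mechanics a little more concrete, here is a minimal Python sketch of how tagged couplets plus a random element could produce this kind of arc. It is not the page's actual code: the stage names, the `Couplet` structure, the `distraction` probability, and the sample lines are all assumptions made up for illustration.

```python
import random
from dataclasses import dataclass

# Hypothetical stage tags, ordered from mundane to deranged.
STAGES = ["mundane", "curious", "deranged"]


@dataclass(frozen=True)
class Couplet:
    student: str  # what the student types
    ai: str       # how the AI replies
    stage: str    # one of STAGES


def stage_for(turn: int, total_turns: int) -> str:
    """Map progress through the conversation onto a stage tag."""
    index = min(turn * len(STAGES) // total_turns, len(STAGES) - 1)
    return STAGES[index]


def build_conversation(pool, total_turns, rng, distraction=0.2):
    """Pick one couplet per turn, mostly following the narrative arc.

    With probability `distraction` (an assumed parameter) the pick ignores
    the arc, standing in for the sleep-deprived student's random tangents.
    Each couplet is used at most once.
    """
    remaining = list(pool)
    conversation = []
    for turn in range(total_turns):
        if not remaining:
            break
        wanted = stage_for(turn, total_turns)
        on_arc = [c for c in remaining if c.stage == wanted]
        if on_arc and rng.random() >= distraction:
            choice = rng.choice(on_arc)
        else:
            choice = rng.choice(remaining)
        remaining.remove(choice)
        conversation.append(choice)
    return conversation


# Illustrative data only; the real page draws on over 1,000 couplets.
pool = [
    Couplet("I need 2,000 words on early AI by 9am.", "Let's start with an outline.", "mundane"),
    Couplet("Wait, who decided to call it 'artificial intelligence'?", "Good question. Let's dig in.", "curious"),
    Couplet("What if the essay is secretly about my coffee?", "It is 4am. Fine. Go on.", "deranged"),
]
for couplet in build_conversation(pool, total_turns=3, rng=random.Random(7)):
    print(f"[{couplet.stage}] Student: {couplet.student} / AI: {couplet.ai}")
```

Setting `distraction` to 0 gives a tidy, predictable arc; nudging it upward lets off-arc couplets barge in at any point, which is roughly the mind-state described above.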

This could, of course, have resulted in a complete mess. But along the way there are flashes of insight that are quite brilliant—and this is where I began to realize that this is more than a slightly snarky demonstration of why conversations, not prompts, are what matter.

Because of the brief that developed while I was working with Claude, there are real gems buried in the conversation stream that illustrate the power of conversational AI. These are the points where a student who starts off just wanting to speed through an assignment gets drawn into the topic, their curiosity overcoming their need for quick results.

As a result, it’s surprising how much you can learn reading the conversation stream as it scrolls by.

This is perhaps aided, in a rather bizarre way, by Claude’s salty and irreverent language at times!

To be clear, none of this was my doing—it was Claude’s design choice given the brief.

But there’s something about the tangents and rabbit holes, and the most unlikely of connections, that transforms the conversation from a rather strait-laced educational exercise into a genuine learning experience, for the simulated student as well as for the person watching both student and AI.

This is learning through storytelling, and it reflects how people increasingly learn with AI apps through the stories they co-create with the AI.

And then there are the essays.

The web page is coded to conclude with Claude producing one of around 30 pre-written essays on the early days of AI. These all have different vibes and styles that nominally match the preceding conversation. And they are all quite jaw-droppingly wonderful.
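One simple way to get an essay that "nominally matches" the conversation is to pick by the conversation's dominant vibe. The Python sketch below is a guess at the general idea, not the page's real logic: the vibe tags and the `pick_essay` helper are hypothetical, and the formal essay title is a placeholder (the other two titles are real examples from the page).

```python
import random
from collections import Counter

# Essay title -> hypothetical vibe tag. The first two titles come from the
# page itself; the third is a placeholder standing in for a formal essay.
ESSAYS = {
    "Dartmouth! The Musical: An Unauthorized Libretto of Artificial Dreams": "out-there",
    "Dear John McCarthy: A Love Letter to Failed Dreams": "out-there",
    "(placeholder) A Formal History of the 1956 Dartmouth Workshop": "formal",
}


def pick_essay(conversation_vibes, essays, rng):
    """Return an essay title whose vibe matches the conversation's dominant vibe.

    Falls back to any essay when the conversation has no vibes recorded or
    no essay carries the dominant vibe.
    """
    all_titles = sorted(essays)
    if not conversation_vibes:
        return rng.choice(all_titles)
    dominant, _count = Counter(conversation_vibes).most_common(1)[0]
    matches = sorted(title for title, vibe in essays.items() if vibe == dominant)
    return rng.choice(matches or all_titles)


# A mostly unhinged conversation should end with one of the "out-there" essays.
print(pick_essay(["formal", "out-there", "out-there"], ESSAYS, random.Random(3)))
```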

Some are very formal and strait-laced, and contain great insights into the early days of AI and the 1956 Dartmouth Workshop. Others are wonderfully, gloriously out there, like "Dartmouth! The Musical: An Unauthorized Libretto of Artificial Dreams," or "Dear John McCarthy: A Love Letter to Failed Dreams."

The sheer variety of the essays provides yet another insight into how simple assumptions of one prompt, one output simply do not do cutting-edge AI platforms and uses justice.

You can shortcut the conversation on the web page if you want and go straight to the essay by pressing Shift-E.

But if I’m being honest, it’s far more enjoyable watching the whole messy, weird, deep, irreverent, angst-ridden conversation play out.

And as it does, think what an appropriate response to “show me your prompt” might be in this case—and what this particular version of Claude might have to say in response!

[1] I feel a slight qualifier is warranted here: there’s still a place for the single-prompt human-AI interaction. But even here, successful prompts are often long, and a product of multiple prior human-AI conversations.

[2] This is exactly what I was teaching my students to do over two years ago when teaching ASU’s first course on prompt engineering. That’s a course I quickly nixed, as AI capabilities (and the ways people are using these apps and tools) were evolving so rapidly that what I was teaching was out of date before I’d started. But the conversation bit still holds.

[3] The bulk of the code was written and refined by Claude Opus 4.1. However, the final touches were added working with GPT-5.

