The hidden risks of using AI to write emails

When is it OK to use AI to craft emails, and when do you risk stepping into a whole lot of unexpected hurt? Intrigued by the question, I dusted off my risk hat and dived in.

Imagine you email a colleague with a suggestion, and the response you get is personable (“thank you for your enthusiasm …”), empathetic (“I appreciate that you’re eager to contribute …”), affirmative (“it’s clear you bring valuable experience and energy to the office …”), but also reminds you of your place (“that said, I want to be clear about how we handle …”).

Now imagine that, at the end of the email, you see this line:

“Would you like me to make the tone slightly sharper (more “firm correction”) or slightly warmer (more “mentorship with a gentle reminder”) depending on how you want this person to walk away feeling?” [1]

It’s a scenario that makes headlines with some regularity these days: lawyers, academics and others paste AI-generated text into papers and reports without noticing they’ve also pasted part of the model’s meta-response.

Professional cut-and-paste jobs like this are embarrassing, but they don’t directly affect most of us. When missteps like this occur in emails though, and especially in personal emails, the fallout can be considerable. It potentially jeopardizes both the sender and the organization they’re a part of, as well as affecting the recipient.

Despite this, there’s a growing trend of using AI to craft emails within professional settings. This isn’t necessarily a bad thing, as AI can be useful here in a number of ways. The trouble is that, without clear guidelines on how and when to use AI in email communications (and when not to), there are potential risks that can get serious pretty fast if not managed effectively.

And while a lot is known about institutional communication dynamics, and some work has been done on AI-mediated communication, surprisingly little has been written about how to avoid serious AI email mishaps.

So I thought I’d dig out my risk hat and dive a little deeper—including developing a simple risk model.

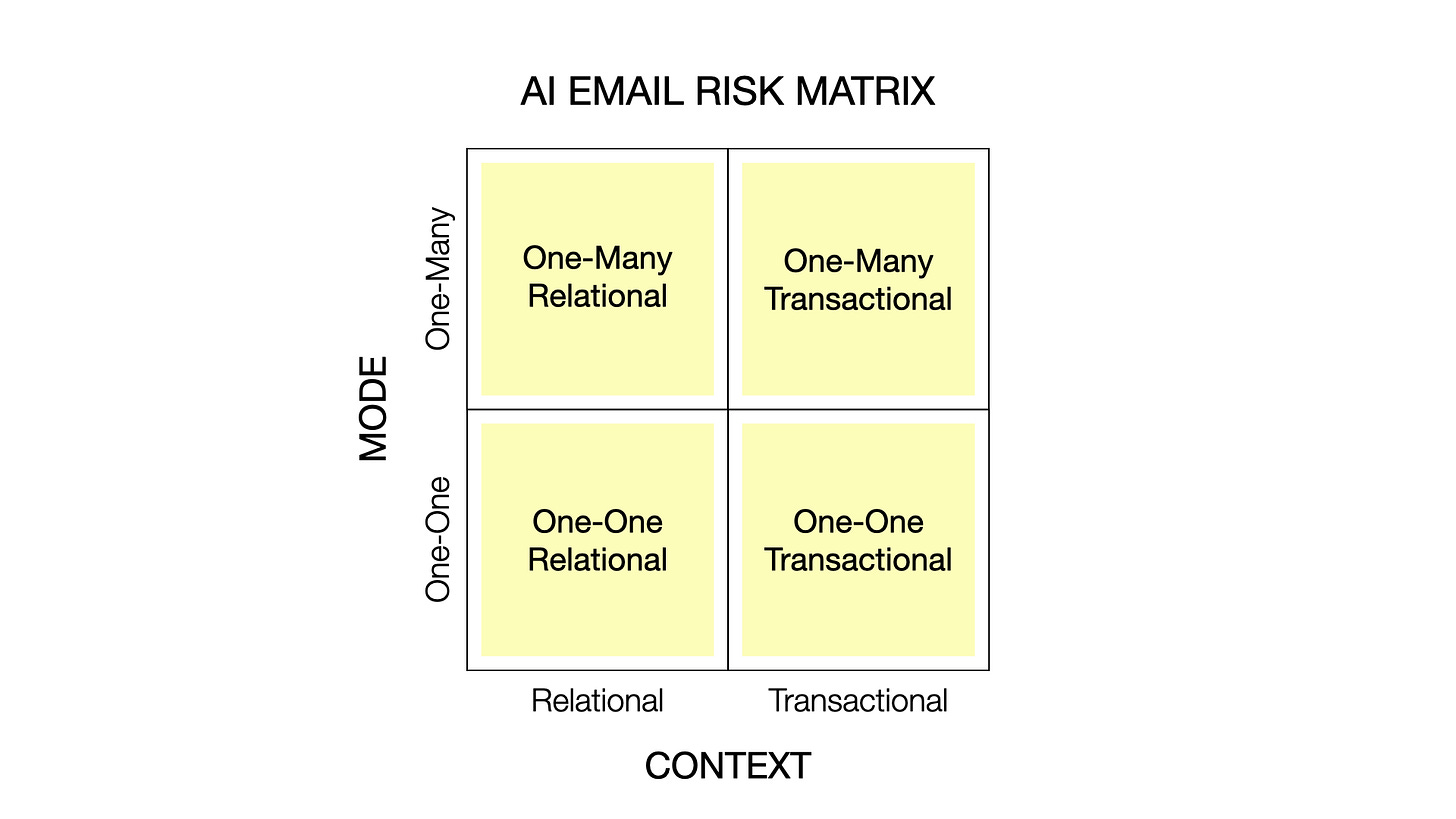

A Four-Quadrant Approach to Assessing and Navigating AI Email Risks

Before diving into the full risk assessment, I wanted to describe the framework and the process. But if you’re eager to cut to the chase, the assessment can be found below this section.

To get a handle on potential risks of using AI to craft emails within organizations, I constructed a basic 2x2 array that considers the context of communication (relational or transactional), and the mode of communication (one-to-one or one-to-many). This was then used as the framework for an exploratory analysis into potential risks.

The driving narrative I used in the analysis was of a supervisor, director or similar using AI to craft an email response to a colleague, employee, mentee, student etc. (or a community of such), and assessing potential risks in terms of:

Level of AI use;

Whether the email was within a relational or transactional context; and

Whether it was a personal email (one-to-one) or a broadcast email (one-to-many).

Within this context, I explored potential risk levels associated with four scenarios:

A baseline scenario where 50% of the email response was crafted by AI.

A light touch scenario where the writer drafted the email response and AI only provided around 5% to the final version—essentially helping polish it.

A heavy-use scenario, where the writer offloaded virtually all the work to an AI app, resulting in around 95% of the response being crafted by AI.

A heavy-use scenario as above but with an inadvertent reveal of AI use, as in the example I started this post with.

For each scenario, four potential risks were identified for each of the quadrants: 16 in all. These were intentionally developed iteratively with ChatGPT to reduce potential biases I brought to the process.

The 16 quadrant-based risks were:

Relational • One‑to‑One Quadrant

Trust erosion — Recipients doubt the sincerity of praise, care, or feedback; future messages are read with skepticism.

Perceived manipulation — Polished “empathetic” wording feels engineered, prompting defensiveness or pushback.

Reputation cost — The sender’s personal standing suffers (seen as lazy, insincere, or out of touch), reducing influence over time.

Authority delegitimization — Formal authority carries less weight; guidance is questioned, escalated, or ignored.

Relational • One‑to‑Many Quadrant

Cultural tone deafness — Generic or euphemistic language misses context or groups, creating avoidable offense and grievance.

Reduced morale — Messages meant to unite feel hollow, weakening belonging and discretionary effort.

Perceived disengagement — Recipients infer leadership outsourced emotional labor; symbolic leadership capital declines.

Credibility loss — The institutional voice is trusted less; subsequent missives face lower uptake and more scrutiny.

Transactional • One‑to‑One Quadrant

Impersonal tone — Over‑formal or canned phrasing strips warmth from simple exchanges, cooling day‑to‑day cooperation.

Error propagation — Small inaccuracies (dates, links, steps) spread into delays, rework, and follow‑up churn.

Over-dependence — Routine notes appear delegated to automation, signaling inattentiveness to details.

Tone miscues — Mis‑signals of status or intent (too curt, too stiff, or overly familiar) create avoidable friction.

Transactional • One‑to‑Many Quadrant

Inaccuracy at scale — A single wrong detail becomes the “official” version, misdirecting many and requiring costly corrections.

Authority dilution — Auto‑sounding broadcasts weaken the sense that directives are considered and binding.

False efficiency — Speed gains hide skipped reviews; fixes arrive later as bulk clarifications or policy clean‑ups.

Tone mismatch — Bureaucratic or robotic wording reduces clarity and urgency, lowering compliance and response rates.

Assessments were then carried out with GPT-5 Pro for each scenario, with a risk score from 0 (negligible) to 10 (catastrophic) assigned to each risk, together with an overall estimated risk score for each quadrant. Again, the use of AI here was important to ensure that the analyses did not simply reflect my own perspectives and biases.

Finally, the descriptions of each risk, and the summaries for each quadrant, were refined through a combination of ChatGPT providing a first draft, my edits, Anthropic’s Claude editing further to ensure clarity, and a last editorial tweak by me. Again, the use of AI in the process was intentional to ensure that these descriptions did not unduly reflect my own perspectives.
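For readers who think in data structures, the framework can be sketched in a few lines of Python. This is only an illustrative sketch, not how the analysis was actually run: the names `Context`, `Mode`, `Risk`, `QuadrantAssessment` and `ai_share` are hypothetical, and the example scores are the relational one-to-one scores from the baseline scenario reported later in this post.

```python
from dataclasses import dataclass
from enum import Enum


class Context(Enum):
    RELATIONAL = "Relational"
    TRANSACTIONAL = "Transactional"


class Mode(Enum):
    ONE_TO_ONE = "One-to-One"
    ONE_TO_MANY = "One-to-Many"


@dataclass(frozen=True)
class Risk:
    name: str
    score: int  # 0 (negligible) to 10 (catastrophic)

    def __post_init__(self):
        # Reject scores outside the 0-10 scale used in the post.
        if not 0 <= self.score <= 10:
            raise ValueError(f"score must be 0-10, got {self.score}")


@dataclass(frozen=True)
class QuadrantAssessment:
    context: Context
    mode: Mode
    ai_share: float  # approximate fraction of the email crafted by AI
    risks: tuple[Risk, ...]
    overall: int  # an assigned estimate, not computed from the risks

    def mean_risk(self) -> float:
        """Plain average of the individual risk scores, for comparison."""
        return sum(r.score for r in self.risks) / len(self.risks)


# Baseline (50% AI) scores for the relational one-to-one quadrant.
baseline_rel_1to1 = QuadrantAssessment(
    context=Context.RELATIONAL,
    mode=Mode.ONE_TO_ONE,
    ai_share=0.50,
    risks=(
        Risk("Trust erosion", 7),
        Risk("Perceived manipulation", 6),
        Risk("Reputation cost", 5),
        Risk("Authority delegitimization", 6),
    ),
    overall=7,
)

print(baseline_rel_1to1.mean_risk())  # 6.0
```

Note that the overall quadrant score (7) is not simply the average of the four risk scores (6.0). It is a separate estimate, which is why the sketch stores it as its own field rather than deriving it.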

So, What Are The Potential Risks?

Below I go through each of the four scenarios in turn. In each case the assessment was carried out by GPT-5 Pro within the AI Email Risk framework. The assessments are generic and intended to cover use of AI in crafting emails across for-profit and not-for-profit organizations (including government agencies and educational establishments). A more sophisticated analysis would focus on specific organization types, structures and cultures. But the analysis below is still a useful starting point.

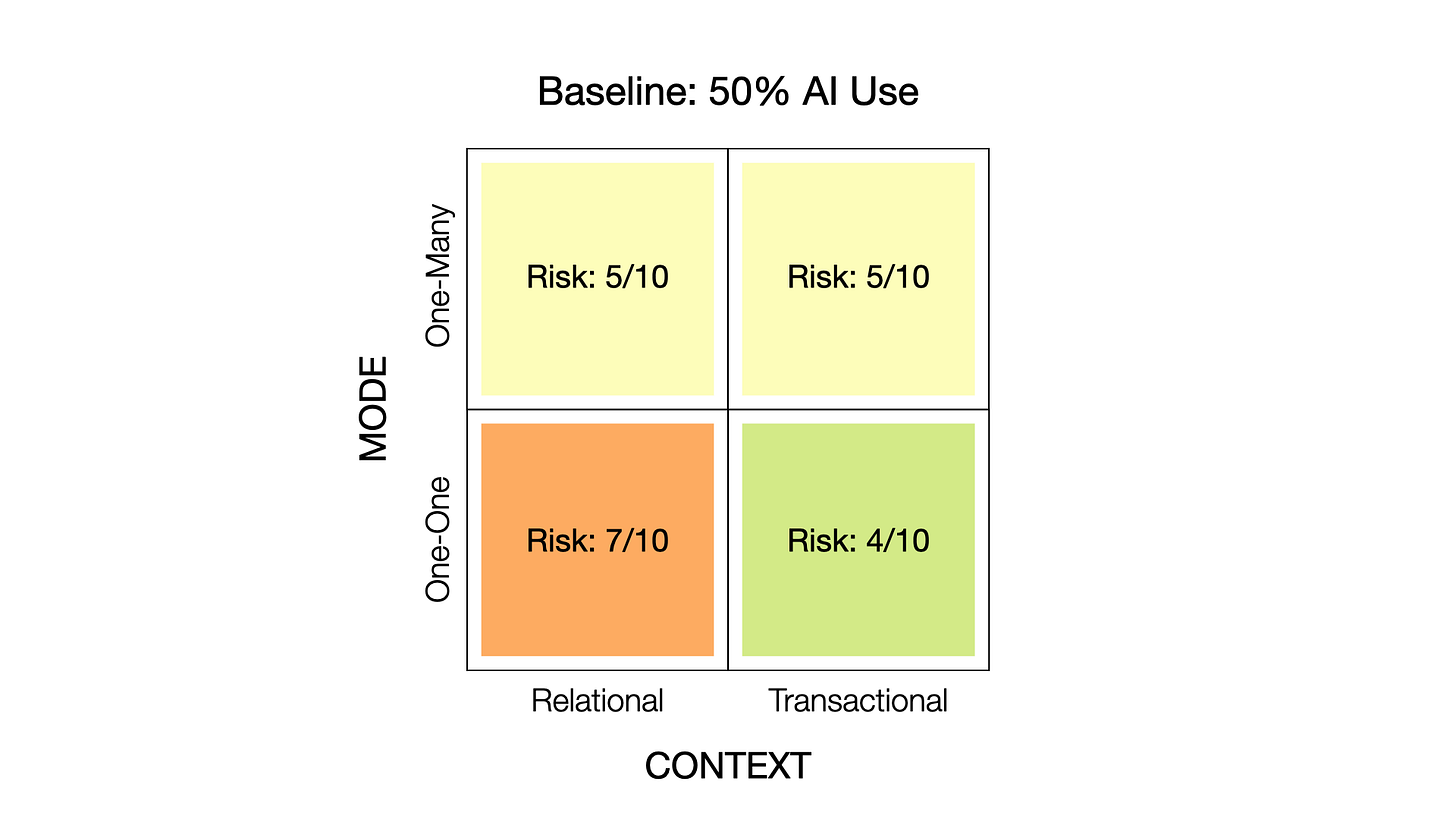

Baseline: 50% AI use

A baseline scenario where 50% of the email response is crafted by AI.

Relational • One‑to‑One (Overall 7/10)

Trust erosion — 7/10. When half the message is machine-written, praise and feedback feel unearned, making recipients read future messages with skepticism.

Perceived manipulation — 6/10. Overly polished “empathetic” language feels calculated, triggering defensiveness or pushback.

Reputation cost — 5/10. The sender appears lazy or insincere, gradually eroding their personal standing and informal influence.

Authority delegitimization — 6/10. Formal authority loses its weight as guidance gets questioned or simply ignored.

When half the reply is machine-written, subtle voice inconsistencies can make care or feedback feel prefabricated. Recipients may suspect they're being “managed” rather than genuinely heard, gradually eroding both trust and respect.

Relational • One‑to‑Many (Overall 5/10)

Cultural tone deafness — 5/10. Generic language misses contextual nuances, inadvertently causing offense.

Reduced morale — 5/10. Messages intended to inspire unity feel hollow, weakening team cohesion and commitment.

Perceived disengagement — 5/10. Staff sense that leaders have outsourced the emotional work, diminishing their symbolic leadership capital.

Credibility loss — 5/10. The institutional voice loses trust, with future messages receiving less attention and more skepticism.

A co-authored tone risks sounding mass-produced, particularly in culture-building messages. Recipients notice what's missing—the euphemisms, the omissions—and conclude leaders aren't truly engaged. Over time, broadcasts lose their persuasive power, requiring increasing follow-up and clarification to achieve the same alignment.

Transactional • One‑to‑One (Overall 4/10)

Impersonal tone — 3/10. Overly formal or canned phrasing gradually erodes everyday workplace cooperation.

Error propagation — 3/10. Small inaccuracies potentially lead to cascading miscommunication.

Over-dependence — 4/10. Routine messages appear automated, suggesting the sender isn't paying attention to details.

Tone miscues — 3/10. Mixed signals about status or intent (too formal, too casual, etc.) create unnecessary friction.

Half-AI replies usually function adequately, but small inaccuracies and tonal inconsistencies create micro-frictions. When overused, colleagues start reading even trivial messages as automated, questioning the sender's attention to detail. The cumulative effect: extra back-and-forth to confirm basics and slower coordination on routine tasks.

Transactional • One‑to‑Many (Overall 5/10)

Inaccuracy at scale — 5/10. A single error becomes the “official” version, requiring time-consuming corrections across the organization.

Authority dilution — 5/10. Messages that sound automated weaken the perception that directives are thoughtfully considered and binding.

False efficiency — 5/10. Initial time savings mask the lack of proper review, with corrections arriving later as bulk clarifications.

Tone mismatch — 4/10. Bureaucratic or robotic language reduces clarity and urgency, decreasing compliance rates.

When half-authored by AI, broadcast logistics risk drifting from the source of truth while the voice feels automated. Recipients discount urgency and wait for clarifications, causing corrections to ripple outward. The result: messages are fast to send but slow to land, with apparent efficiency masking downstream confusion.

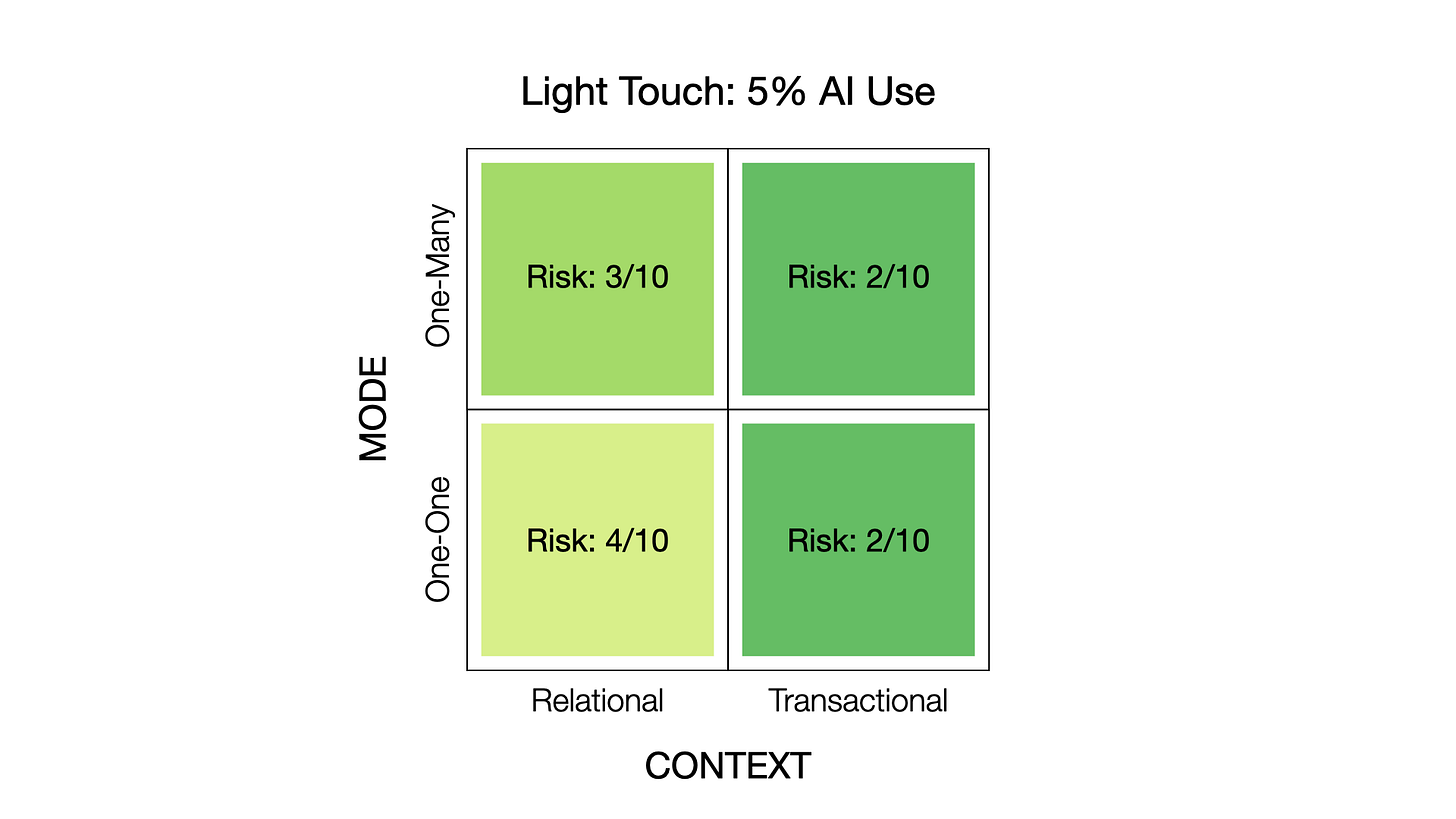

Light Touch: 5% AI Use

A light touch scenario where the writer initially drafted an email response and AI only provided around 5% to the final version—essentially helping polish it.

Relational • One‑to‑One (Overall 4/10)

Trust erosion — 4/10. Light polishing can subtly shift voice, potentially making recipients question the sender's genuine engagement.

Perceived manipulation — 3/10. Unexpectedly smooth phrasing compared to past communications can feel calculated rather than sincere.

Reputation cost — 3/10. Consistently “perfect” replies begin to read as formulaic, gradually diminishing personal standing.

Authority delegitimization — 3/10. On sensitive topics, guidance gets second-guessed when the voice sounds templated rather than personal.

At 5% use, risks remain modest but real in one-to-one relational exchanges. Minor voice shifts can feel canned, sparking subtle skepticism about intent and care. With repetition, recipients begin discounting the sender's judgment, slowing decisions that need a clearly human, contextual response.

Relational • One‑to‑Many (Overall 3/10)

Cultural tone deafness — 3/10. Small wording choices potentially exclude groups or sanitize events—low probability but highly visible when it happens.

Reduced morale — 3/10. Over-polished messages lose the warmth they're meant to convey.

Perceived disengagement — 3/10. Recipients sense leaders prioritized surface polish over genuine presence.

Credibility loss — 3/10. Future broadcasts face increased skepticism and require more explanation to land effectively.

Light editing usually helps, but can neutralize authentic voice in culture-setting messages. When phrasing feels generic or sanitized, people sense leadership distance, dampening morale and weakening buy-in. The effect is subtle: messages still send, but they persuade less and require more follow-up to stick.

Transactional • One‑to‑One (Overall 2/10)

Impersonal tone — 2/10. Grammar-perfect replies can feel stiffer than recipients expect from colleagues.

Error propagation — 2/10. Rephrasing occasionally shifts meaning in unintended ways.

Over-dependence — 1/10. Occasional polish is fine; constant use for trivial notes suggests inattentiveness.

Tone miscues — 2/10. Polishing can introduce unnecessary formality or terseness, creating minor friction.

Risks are minimal; clarity and speed typically improve. The main downsides are micro-frictions: slightly stiff tone or details altered in rephrasing. If every trivial exchange gets polished, colleagues may question attention to detail, prompting confirmations that negate time saved.

Transactional • One‑to‑Many (Overall 2/10)

Inaccuracy at scale — 2/10. Copy-edits rarely change facts, but last-minute rephrasing can accidentally alter factual information.

Authority dilution — 2/10. Templated style slightly reduces a sense of urgency and compliance.

False efficiency — 2/10. Relying on polish instead of review potentially pushes fixes downstream.

Tone mismatch — 1/10. Bureaucratic cadence can blunt urgency, though risk is typically minor.

With careful human drafting, broadcast risk at 5% is minimal. Potential issues include subtle factual drift or an “auto-message” voice that reduces urgency. A quick human review of facts and tone usually eliminates these risks while preserving efficiency.

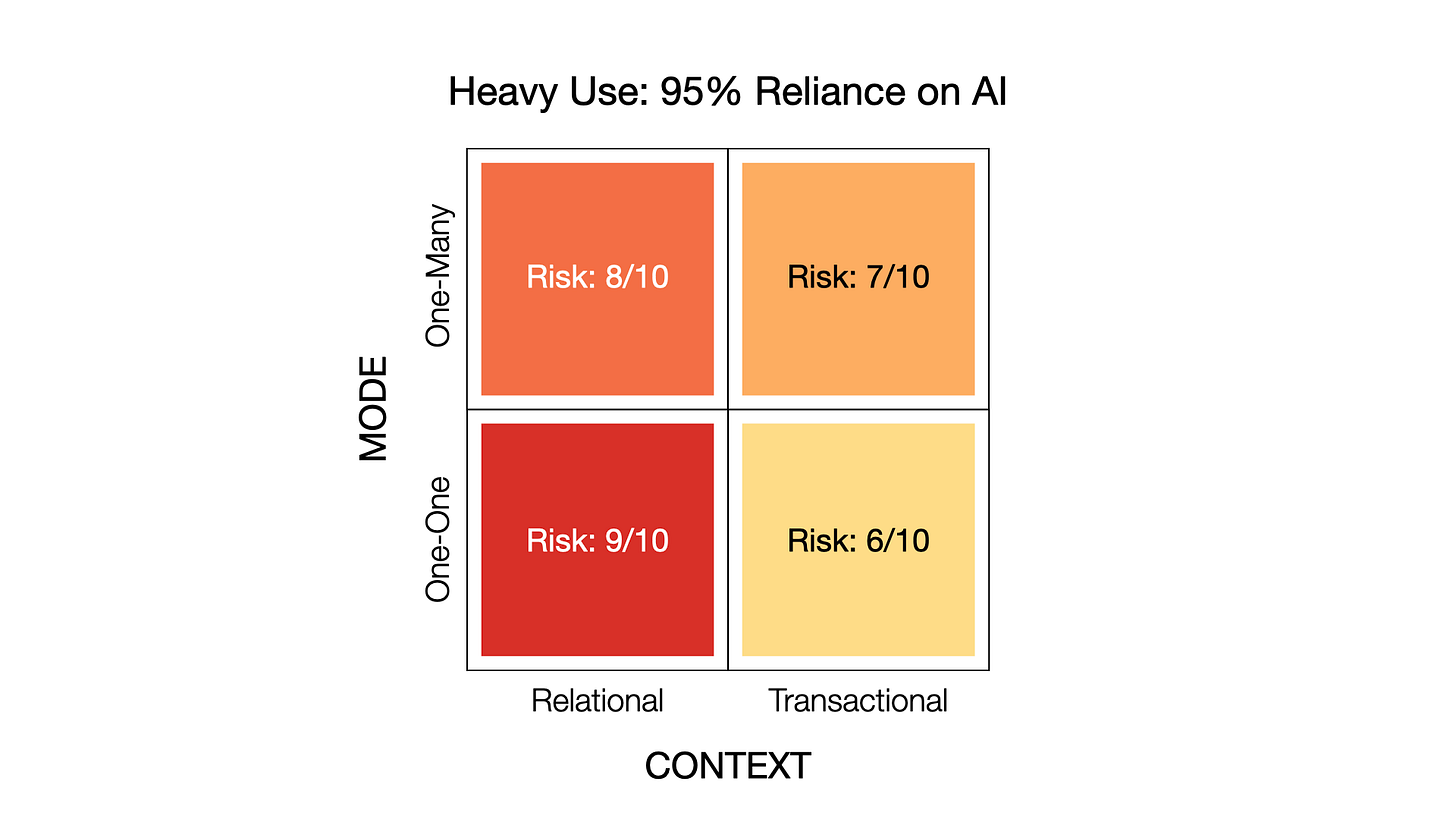

Heavy Use: 95% Reliance on AI

A heavy-use scenario, where the writer offloaded virtually all the work to an AI app, resulting in around 95% of the response being crafted by AI.

Relational • One‑to‑One (Overall 9/10)

Trust erosion — 9/10. Machine-written praise and feedback lack authentic specificity; recipients doubt sincerity and reinterpret past messages skeptically.

Perceived manipulation — 9/10. Algorithm-optimized “empathy” reads as engineered, especially across power differentials, prompting resistance or withdrawal.

Reputation cost — 8/10. The sender is seen as lazy or fake; colleagues stop confiding, and informal influence evaporates.

Authority delegitimization — 8/10. Guidance faces constant questioning; compliance becomes minimal rather than proactive.

Outsourcing relational replies to AI strips away genuine warmth and specificity. Even without obvious tells, recipients sense the templating—they feel managed, not heard. Trust plummets, taking compliance and candor with it. Feedback gets debated, follow-through stalls, and influence evaporates—particularly where power differentials already create sensitivity.

Relational • One‑to‑Many (Overall 8/10)

Cultural tone deafness — 7/10. Generic, sanitized, or “both-sides” language misreads context or excludes groups, causing unnecessary offense.

Reduced morale — 8/10. Hollow messaging undermines belonging and discretionary effort, especially during challenging times.

Perceived disengagement — 8/10. People recognize outsourced emotional labor, weakening leadership's symbolic authority and rallying power.

Credibility loss — 8/10. The institutional voice loses trust; future broadcasts potentially see poor uptake and need constant reinforcement.

AI-authored cultural messages ring hollow. Recipients recognize outsourced emotional labor, potentially triggering credibility collapse and morale decline. Generic phrasing misses affected groups or sanitizes difficult realities, breeding cynicism. Messages technically send but fail to move people; leaders need excessive follow-up, paying steep coordination costs for basic alignment.

Transactional • One‑to‑One (Overall 6/10)

Impersonal tone — 6/10. Overly formal, canned replies discourage cooperation; recipients feel like cogs, not colleagues.

Error propagation — 6/10. AI potentially introduces subtle factual errors that can cascade into delays and the need for corrective action.

Over-dependence — 5/10. Automating trivial exchanges signals carelessness; recipients start verifying everything.

Tone miscues — 5/10. Status and intent get mis-signaled (too formal, too casual, etc.), creating unnecessary friction.

Near-total automation in routine replies potentially breeds subtle errors and stiff tone. People double-check simple instructions, adding delays. Over time, the sender appears careless, and small mistakes compound into significant rework. Exchanges remain functional, but accumulating micro-friction erodes confidence in the sender's competence.

Transactional • One‑to‑Many (Overall 7/10)

Inaccuracy at scale — 7/10. Single errors become “official” for all recipients; corrections prove costly and uneven.

Authority dilution — 7/10. Obviously automated broadcasts undermine the perception of thoughtful, binding directives.

False efficiency — 7/10. Speed masks absent human review, potentially creating downstream problems.

Tone mismatch — 6/10. Robotic language obscures clarity and urgency, potentially undermining compliance and response rates.

AI-authored broadcasts risk authoritative errors that multiply across the organization. The automated voice potentially undermines urgency and compliance. Quick sends hide missing checks; corrections demand widespread follow-up. Tone mismatches further suppress response rates, turning “fast to ship” into “expensive to fix” through costly clean-up efforts.

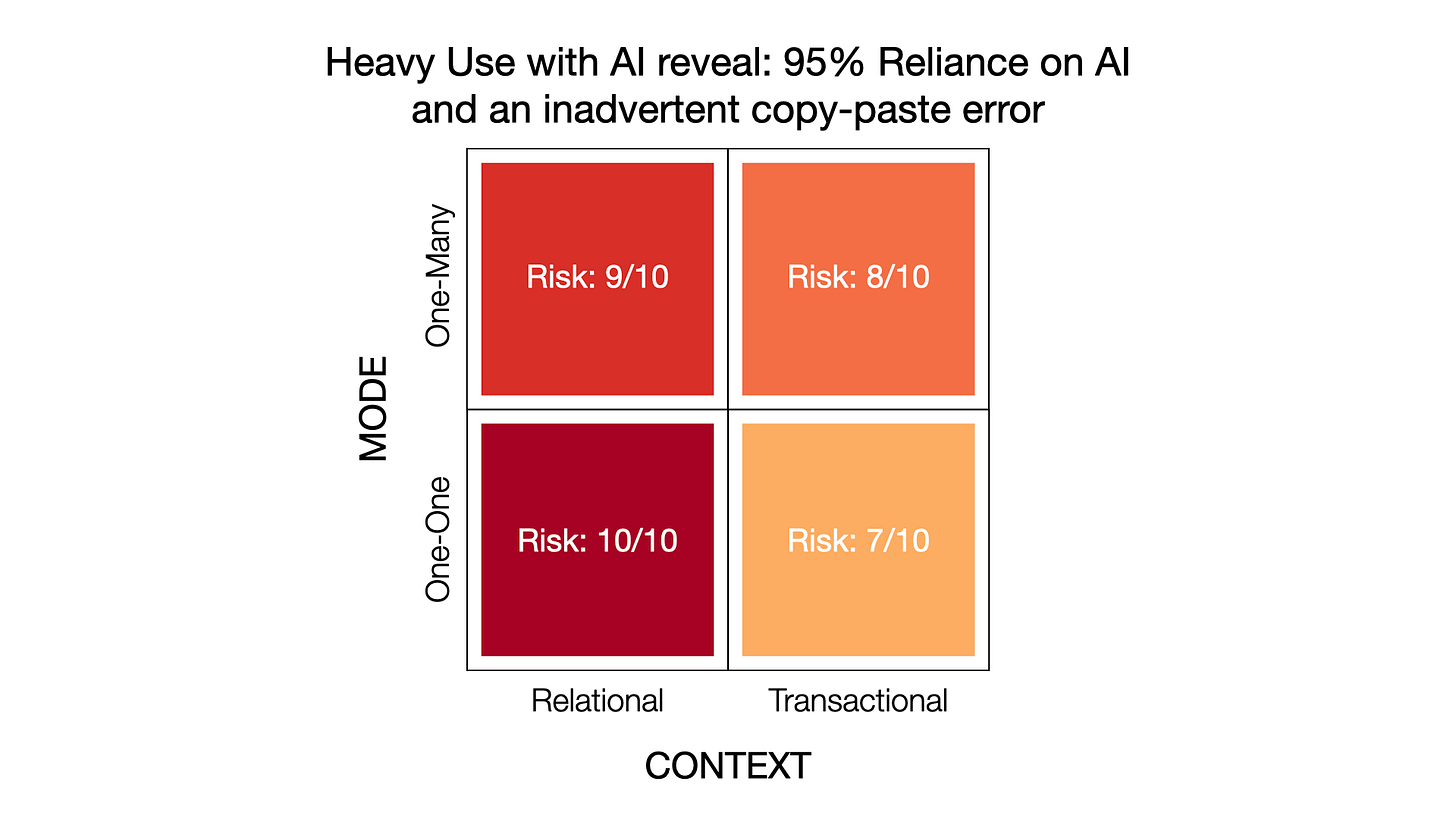

Heavy Use with AI reveal: 95% Reliance on AI and an inadvertent copy-paste error

A heavy-use scenario as in the previous scenario, but with an inadvertent reveal of AI use in the email reply.

Relational • One‑to‑One (Overall 10/10)

Trust erosion — 10/10. The exposed AI prompt reveals staged empathy; recipients feel betrayed and question all past and future messages.

Perceived manipulation — 10/10. Visible “tone-tuning” demonstrates deliberate emotional engineering, triggering anger or complete withdrawal.

Reputation cost — 9/10. Sender becomes known as insincere and lazy; peers stop confiding, and informal influence vanishes.

Authority delegitimization — 9/10. All guidance faces resistance; compliance drops to bare minimum while escalations spike.

Exposure transforms fragility into outright breach of trust. Recipients see proof the sender outsourced care and deliberately manipulated tone. Expect immediate defensiveness, passive resistance, and permanently damaged access to honest feedback. The sender's influence collapses, making sensitive matters nearly impossible to resolve via email.

Relational • One‑to‑Many (Overall 9/10)

Cultural tone deafness — 8/10. The exposed AI prompt clashes with lived reality, revealing canned sentiment and inviting widespread offense.

Reduced morale — 9/10. People realize leadership performed rather than demonstrated empathy; a sense of belonging and discretionary effort plummet.

Perceived disengagement — 9/10. Proof of outsourced emotional labor fuels widespread cynicism and contempt.

Credibility loss — 9/10. The institutional voice becomes actively distrusted; future broadcasts face open skepticism and require extraordinary effort to land.

Once the AI prompt leaks, the message becomes transparently performative. Recipients see leaders didn't engage with the moment, crushing morale and cementing cynicism. All subsequent communications carry negative weight, requiring extensive damage control and face-to-face interventions just to achieve baseline alignment—starting from a deep trust deficit.

Transactional • One‑to‑One (Overall 7/10)

Impersonal tone — 7/10. The reveal makes even routine replies feel deliberately robotic and uncaring.

Error propagation — 6/10. Recipients lose all faith in accuracy, double-checking everything and creating widespread delays.

Over-dependence — 7/10. Being “caught” using AI for simple tasks signals complete inattention to basics.

Tone miscues — 6/10. The exposed prompt reframes all awkwardness as deliberate manipulation, amplifying friction.

For simple exchanges, exposure proves both embarrassing and corrosive. People assume zero attention to detail and routinely verify everything. Minor tone issues now appear intentional, multiplying friction. Work continues, but constant verification erases any efficiency gains from automation.

Transactional • One‑to‑Many (Overall 8/10)

Inaccuracy at scale — 7/10. Trust in content collapses; recipients second-guess all facts, creating chaotic execution.

Authority dilution — 8/10. The automated appearance plus revelation destroys any sense of thoughtful, binding directives.

False efficiency — 8/10. Cleanup communications and clarifications multiply, far exceeding any initial time savings.

Tone mismatch — 7/10. Robotic language becomes glaringly obvious post-reveal, crushing credibility and response rates.

At scale, the reveal transforms “official” messages into suspect information. People hesitate, seek secondary confirmation, or follow conflicting interpretations. Authority evaporates, compliance and timeliness collapse. Multiple follow-up rounds become necessary to restore basic clarity, making the communication cycle far more resource-intensive than if humans had simply drafted carefully from the start.
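Pulling the overall quadrant scores from the four scenarios above into one place makes the pattern easier to see. The short Python sketch below is just a convenience for comparing them; the helper names `riskiest_quadrant` and `reveal_penalty` are hypothetical, and the numbers are the overall scores reported above.

```python
# Overall quadrant scores (0-10) as reported in the four scenarios above.
QUADRANTS = (
    "Relational / One-to-One",
    "Relational / One-to-Many",
    "Transactional / One-to-One",
    "Transactional / One-to-Many",
)

OVERALL = {
    "Light touch (5% AI)": (4, 3, 2, 2),
    "Baseline (50% AI)": (7, 5, 4, 5),
    "Heavy use (95% AI)": (9, 8, 6, 7),
    "Heavy use + reveal": (10, 9, 7, 8),
}


def riskiest_quadrant(scores):
    """Return the label of the quadrant with the highest overall score."""
    return QUADRANTS[scores.index(max(scores))]


def reveal_penalty():
    """Per-quadrant increase when heavy AI use is inadvertently revealed."""
    heavy = OVERALL["Heavy use (95% AI)"]
    reveal = OVERALL["Heavy use + reveal"]
    return {q: r - h for q, h, r in zip(QUADRANTS, heavy, reveal)}


for scenario, scores in OVERALL.items():
    print(f"{scenario:22} {scores}  riskiest: {riskiest_quadrant(scores)}")

print(reveal_penalty())
```

On these numbers, the relational one-to-one quadrant scores highest in every scenario, and the inadvertent reveal adds one point to the overall score of each quadrant compared with heavy use alone.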

Final Thoughts

I started this exploration/analysis as I’m coming across more and more people using AI to craft email responses, and the potential risks intrigued me—especially as I haven’t come across any clear guidance on how to navigate these use-cases.

I half expected the risks to end up being trivial, and was quite taken aback by how serious some of them potentially are in this analysis. So much so that I now believe that anyone using, or thinking about using, AI apps to craft responses to emails should take them extremely seriously.

On reflection, I’m not surprised that there are potentially serious risks here, and even catastrophic ones. Any organization that relies on the relational connective tissue between its members to operate and thrive is vulnerable to actions that erode trust and trustworthiness. And so in cases where relational communication is critical and is compromised, there are going to be consequences.

I suspect that this is likely to be a lower risk in some organizations than others, and part of the analysis that I didn’t include here indicates this. For instance, a highly transactional corporation is less likely to be vulnerable to relational faux pas than, say, a university, where pretty much everything is relational (at least as far as faculty are concerned). But even within corporations, people modulate how they work and behave based on relationships, and so even here eroding these can be problematic.

I suspect at this point that many folks using AI for their email responses haven’t considered the potential risks, and why should they if they haven’t been alerted to them? But at least by making them visible and providing some sense of where the potential vulnerabilities are, this framework and analysis will hopefully help people avoid missteps in the future that could come back and bite them, and their organization.

Because like it or not, these are risks that aren’t going away anytime soon.

[1] Both this and the quotes above were generated by ChatGPT from a request for a response to an email I crafted to myself, just in case anyone was wondering!

Really appreciate this post. Like most others reading this, I imagine, I enjoy correspondence and look at it as opportunity for authenticity and rapport—so I write all of mine with no AI.

However, I also try to set aside my own bias as an English teacher and confident writer, as the downsides of a poorly-written, authentic email are heightened when writing isn't a strength for you.

That is where I think this post is most applicable: for those who have to weigh real downsides on either side. Cool approach/lens—much appreciated!

I write my own emails because relationships matter to me. (And I like writing.)

The only way I've so far figured to message this in-medium (rather than through meta commentary) is to keep email messages so brief that it would be *at least as much* effort to write the prompt, edit, and cut-and-paste.

At that point it becomes credible that I wrote the message myself because it was, in fact, the incentivized option! Of course, this limits the domain of discourse... but I suppose I prefer to use email for shorter communications and, say, a proper briefing note, memo, or position paper, for anything longer.