The Scared Witless Educator's Guide to Surviving ChatGPT GPT-5

If you're panicking over how to survive in a world where every student has access to one of the most powerful AIs ever made, I have a plan ...

With the launch of ChatGPT GPT-5 last week, OpenAI put a wrench in the plans of every university lecturer, instructor and professor who is in AI denial, thinks that we’re still in 2022, or hasn’t quite got round to working out how to teach in a world where every AI-enabled student has the potential to be several steps ahead of them.1

As a result, I’m anticipating a growing wave of panic as the new academic year starts and instructors realize that they have no idea how to survive the coming storm.

But I have a plan.

And it’s one that involves using GPT-5 to help you survive GPT-5!

The plan takes a feature of ChatGPT that’s designed to help students learn, and turns it on its head, using it instead to help educators learn to be better educators in an age of AI:

GPT-5’s Study Mode.

Admittedly it’s not the sort of plan that’s likely to sit easily with faculty who have decided that AI is a moral abomination, or simply not their thing.

But believe me, it’s a good plan. And it’s one that you’ll thank me for one day.

The Plan

First open a free account with ChatGPT if you haven’t already (chat.com).

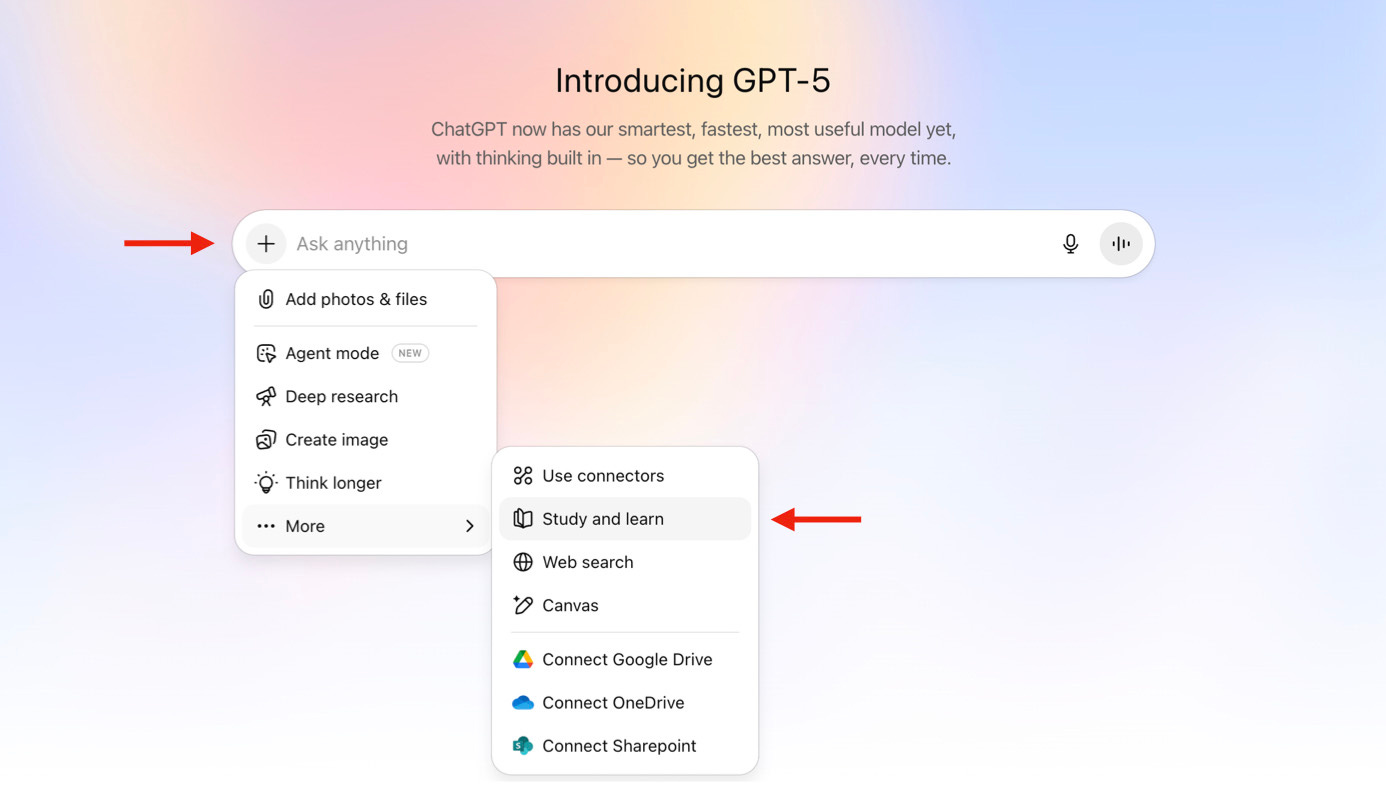

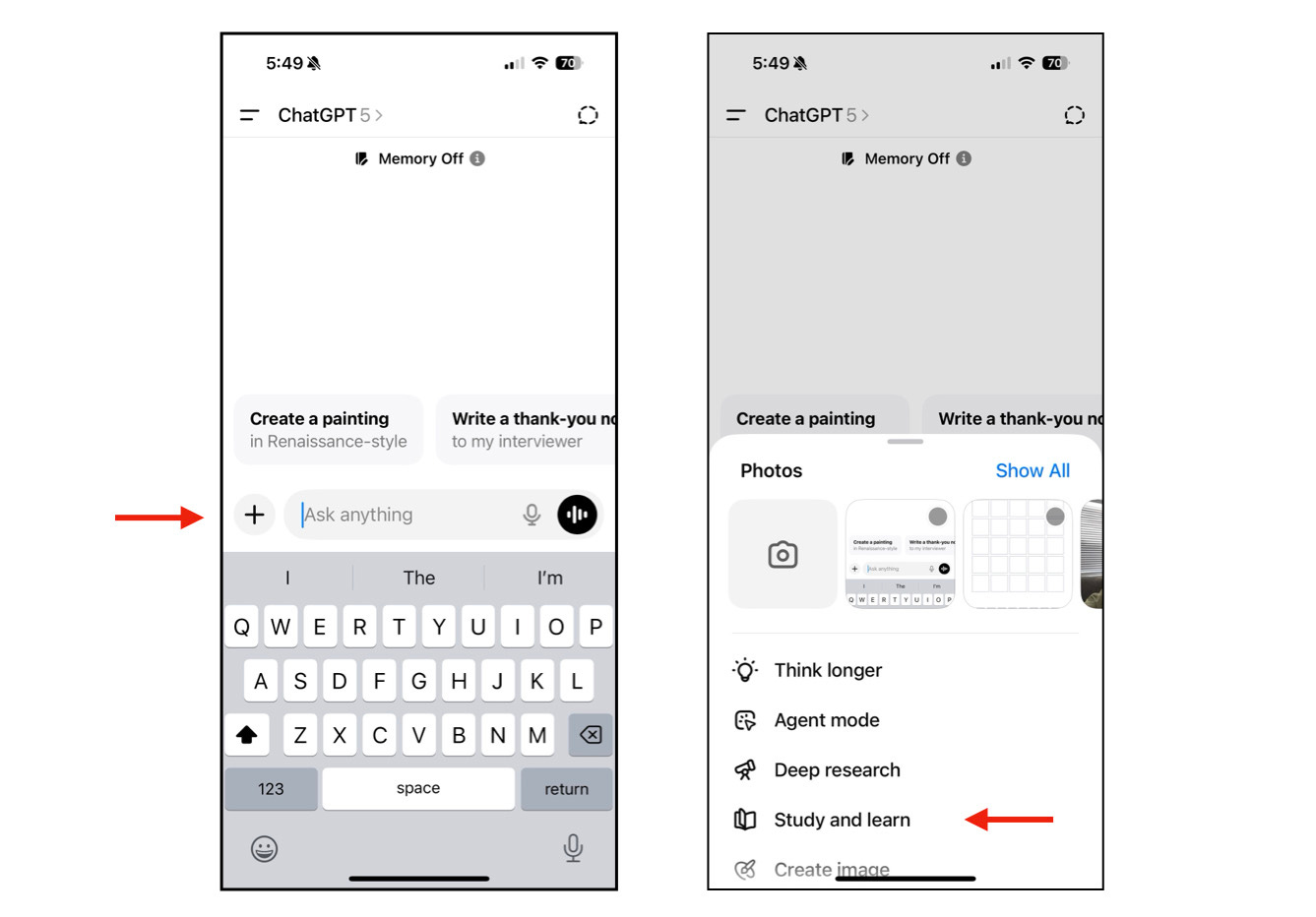

Second, place GPT-5 in Study Mode—this is important! The screen shots below show where you can find this as it’s a little buried.

And third, choose a prompt from below, copy and paste it into the Ask Anything box, and press submit!

The prompts:

I’m a senior professor with 20 years in the trenches. I never had formal teacher training, and frankly, I’m proud of that. You have no idea how good I am at what I do! So why on earth should I waste my time on this GPT-5 thing, instead of just doing what I know works?

I’m completely new to AI in the classroom. I teach undergrads, and I’m feeling increasingly out of my depth with AI. What’s the bare minimum I should know to not look clueless?

My students need to learn to think without relying on an algorithmic babysitter (I’m a university lecturer). They need to wrestle with real books, real papers, and real frustration—like every generation before them. Convince me GPT-5 isn’t just a crutch for the lazy.

I’ve been teaching longer than some of my undergrad students have been alive. I know what’s good for them—and it’s not an AI writing their essays. Tell me why I should even consider letting GPT-5 near my classroom.

My undergrad teaching methods work. Nobody’s complained about them. And if I’m honest, I’m worried GPT-5 will undo decades of hard work. How do I protect my life’s work from becoming obsolete?

I run a tight ship. I don’t like surprises. Give me a step-by-step plan for preparing for students using GPT-5 in my courses.

Essays are the purest form of intellectual training (everyone knows that, right?). But my grad students are quietly outsourcing their “blood, sweat, and tears” writing to GPT-5. How do I get them to actually care about doing the real work themselves?

I’ve only heard horror stories about undergrad students using AI to cheat their way through courses. I teach two classes this semester and have zero practical knowledge about GPT-5, or any form of AI. What’s my survival plan?

Don’t tell anyone, but I’m teaching an undergrad course this semester and I have no idea how to handle GPT-5. Please give me a discreet crash course before my students eat me alive.

AI is inherently unethical. And GPT-5 in the university classroom feels like inviting the fox into the henhouse. How do I stop my students from using it without me turning into a monster?

I’m open to using GPT-5 with my graduate students if it can enhance learning instead of dumbing them down. Give me a no-fluff, practical tutorial.

I didn’t sign up to be an IT help desk for my own classroom. How do I use GPT-5 without turning into a full-time AI troubleshooter?

Everyone knows AI hallucinates and makes things up. Why would I allow such a technology within a million miles of my classroom?

I’m convinced GPT-5 will make my students lazy, my colleagues redundant, and my subject irrelevant. Show me this isn’t the beginning of the end for higher education.

My students already think I’m out of touch. If I ban GPT-5, they’ll call me a dinosaur. If I embrace it, I’m basically teaching them to cheat. What do I do?

I have tenure. I don’t need GPT-5. Period.

I’m a university professor, I’ve been teaching for 20 years, and I’m so worried about GPT-5 that I don’t even know where to begin! Can you help?

That’s it.

… almost.

The serious stuff

I am, of course, being a little cheeky here, although I’ve been very impressed by how GPT-5 in Study Mode turns these prompts into genuinely useful interactive tutorials. And even though this mode was designed for students, it’s a no-brainer for educators to use it to learn how to be better educators in an age of AI.

Plus, it has the advantage of being infinitely patient, responsive, and private: you can ask it the things you desperately want to know about AI in the classroom, but would never be seen dead asking in front of your colleagues.2

But there’s also an even more important issue at stake here: You cannot teach effectively in a class where students are using AI, asking questions about it, or exploring it, without having experienced it yourself.

Even if you reject AI on ethical, moral or ideological lines, you need to know what you’re talking about, rather than basing your perspectives on hearsay, assumptions, and out-of-date information.

This may sound obvious, but I’m surrounded by educators who still think that the AI of today is the same as it was three years ago, who assume that hyperbolic headlines about chatbots which happen to align with their views are reliable, and who believe that tools like ChatGPT are simply the modern-day equivalent of calculators.

All of these assumptions are dangerous, especially for educators, but GPT-5 is a bigger game changer for university classrooms than they might suggest:

Apart from being a surprisingly slick and immensely powerful tool, it’s freely available to anyone and everyone, including students. And this combination of easy access and hard-to-grasp capabilities will enable undergrads and grads to run rings around any instructor who isn’t prepared.3

Translated into education-speak, this means that, whatever you’re teaching in class, your students will have more knowledge and insights at their fingertips than you could possibly have, all delivered faster than you can possibly teach.

It means that your AI-augmented students will be able to brainstorm, research, strategize, plan, and deliver on projects at a level that far surpasses what you can imagine.

It means that they’ll be able to write stunningly good essays and complete assignments at a level where the only way you can tell they are AI-assisted (or AI-generated) is because they are so good. Forget what you’ve heard about hallucinations and stilted prose: GPT-5 is good. Really good.

And it will mean that you risk feeling like the least knowledgeable and equipped person in the room as AI reveals everything you wish you were, but fear you’re not.

I’m exaggerating a little here, but not a lot. And the reality is that most university educators are not equipped for this transition.

This is not your GPT-3.5 from 2022. It’s no longer a simple tool where you put in a prompt and get an error-riddled response.4 Rather, it’s an immensely powerful tool that can do things far beyond what most people imagine.

And if you are an educator who’s scared witless faced with this, you probably should be.

Thankfully, there are ways to not only survive in a post-GPT-5 classroom, but to thrive. And it’s both ironic and impressive that the tool that can help achieve this is the same one that’s fueling the challenges in the first place.

Happy Study Mode prompting!

1. Since its launch on August 7, there’s been a growing backlash against OpenAI around variable performance from GPT-5, a couple of embarrassing launch flubs, and the removal of access to older models (although OpenAI are now backtracking on this). Much of this is being generated by AI pundits who love a good fail, although many of their points are valid. Yet for the vast majority of users GPT-5 will be a game changer, all the more so as early teething problems are resolved.

2. Before someone reminds me that nothing submitted to ChatGPT is 100% private unless you’re using an enterprise version of the platform: I’m referring to the ability to have conversations that almost no one else is likely to find out about … unless you’re foolish enough to share them or leave your browser open!

3. It’s worth reading Reid Hoffman’s recent post on LinkedIn about GPT-5 where he writes “ChatGPT may be the first AI that most of the 8 billion people on our planet use. They have the opportunity to make ChatGPT synonymous with AI. This gives OpenAI the ultimate surface area to deploy any bleeding-edge capability/superpower to billions of people.” https://www.linkedin.com/posts/reidhoffman_gpt-5-is-out-and-while-i-could-write-pages-activity-7359329865600286720-ePy5/

4. I’m amazed at how many people tell me that ChatGPT (and similar tools) simply make stuff up and hallucinate, and so cannot be trusted. Yes they do. And yes, there’s a level of AI literacy that’s needed in order to navigate this. And yet the hallucination rates of an AI model like GPT-5 are massively lower than those of the original GPT-3.5, to the point where dismissing these tools on the basis of hallucinations is simply naive. This is in part due to an architecture that carries out internal checks and balances to help ensure the accuracy of responses.

Thank you. I’ll be teaching intro to computer science and intro to philosophy this semester, and I only have six months teaching experience, back when rephrasing tools and CourseHero.com seemed to be the most common ways to cheat. I’ll try your plan!

This is for the educators living under mossy rocks? This feels neither cheeky nor particularly relevant. If the choir you’re preaching to here isn’t far and away ahead of your “advice”, they likely shouldn’t be teaching in 2025. 🤷♂️